Hi,

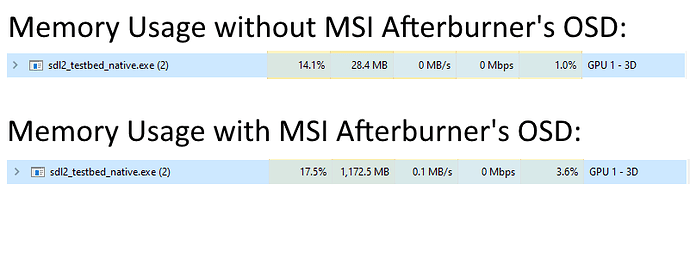

We have been trying to create two windows with SDL2 (2.0.22, the latest version at the time of writing), but a huge memory leak appears when we do.

It can be reproduced simply by creating two OpenGL contexts (any version, core or compatibility profile) and drawing with either Skia or direct OpenGL calls.

The sample code contains only 2 functions, main and paint. Paint first makes the window's context current (SDL_GL_MakeCurrent), then draws a Hello Triangle from vertices, then swaps the buffers with SDL_GL_SwapWindow. We tested both with and without glFlush and glFinish, so those were not forgotten.
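For reference, a program of this shape could look roughly like the sketch below. It is not the exact sample: it assumes a default (compatibility) context so that legacy immediate-mode drawing works, and the window title, the 640x480 size and the error handling are placeholders. On Windows it also needs to be linked against SDL2, SDL2main and opengl32.

```c
#include <SDL.h>
#include <SDL_opengl.h>
#include <stdio.h>

/* Make the window's context current, draw one triangle, then present. */
static void paint(SDL_Window *window, SDL_GLContext context)
{
    SDL_GL_MakeCurrent(window, context);

    glClearColor(0.1f, 0.1f, 0.1f, 1.0f);
    glClear(GL_COLOR_BUFFER_BIT);

    /* Legacy immediate mode: only valid in a compatibility context. */
    glBegin(GL_TRIANGLES);
    glColor3f(1.0f, 0.0f, 0.0f); glVertex2f(-0.5f, -0.5f);
    glColor3f(0.0f, 1.0f, 0.0f); glVertex2f( 0.5f, -0.5f);
    glColor3f(0.0f, 0.0f, 1.0f); glVertex2f( 0.0f,  0.5f);
    glEnd();

    SDL_GL_SwapWindow(window);
}

int main(int argc, char *argv[])
{
    SDL_Window *windows[2] = { NULL, NULL };
    SDL_GLContext contexts[2] = { NULL, NULL };
    int running = 1;
    int i;

    (void)argc;
    (void)argv;

    if (SDL_Init(SDL_INIT_VIDEO | SDL_INIT_EVENTS) != 0) {
        fprintf(stderr, "SDL_Init failed: %s\n", SDL_GetError());
        return 1;
    }

    /* One window and one OpenGL context per window. */
    for (i = 0; i < 2; ++i) {
        windows[i] = SDL_CreateWindow("leak test",
                                      SDL_WINDOWPOS_CENTERED, SDL_WINDOWPOS_CENTERED,
                                      640, 480,
                                      SDL_WINDOW_OPENGL | SDL_WINDOW_RESIZABLE);
        if (windows[i] != NULL) {
            contexts[i] = SDL_GL_CreateContext(windows[i]);
        }
        if (windows[i] == NULL || contexts[i] == NULL) {
            fprintf(stderr, "Window/context creation failed: %s\n", SDL_GetError());
            SDL_Quit();
            return 1;
        }
    }

    while (running) {
        SDL_Event event;
        while (SDL_PollEvent(&event)) {
            /* Stop when the app quits or either window is closed. */
            if (event.type == SDL_QUIT ||
                (event.type == SDL_WINDOWEVENT &&
                 event.window.event == SDL_WINDOWEVENT_CLOSE)) {
                running = 0;
            }
        }
        for (i = 0; i < 2; ++i) {
            paint(windows[i], contexts[i]);
        }
    }

    for (i = 0; i < 2; ++i) {
        SDL_GL_DeleteContext(contexts[i]);
        SDL_DestroyWindow(windows[i]);
    }
    SDL_Quit();
    return 0;
}
```

Dropping the loop bound to 1 gives the single-window case for comparison.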

On some Windows systems, the leak starts at about 1 MB every few frames. After a few seconds it jumps to 1 MB per frame, growing by 100-200 MB per second until it crashes DWM or the entire OS.

As a note, the frame rate collapses as well when this happens, not going above 100-150 fps. With only one window we get around 2500 fps; with 2 simple windows, each drawing only a Hello Triangle, it drops to about 100 fps in both windows.
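As a rough cross-check of these numbers: at 1 MB per frame and 100-150 fps, memory would grow by 100-150 MB per second, which is in line with the 100-200 MB per second observed. The drop from 2500 fps to 100 fps means a frame goes from about 0.4 ms to 10 ms. The helper names below are made up for illustration.

```c
/* Memory growth per second, given growth per frame and the frame rate. */
static double growth_mb_per_second(double mb_per_frame, double frames_per_second)
{
    return mb_per_frame * frames_per_second;
}

/* Time spent on a single frame, in milliseconds. */
static double frame_time_ms(double frames_per_second)
{
    return 1000.0 / frames_per_second;
}
```

For example, `growth_mb_per_second(1.0, 150.0)` gives 150 MB per second, and `frame_time_ms(100.0)` gives 10 ms per frame.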

– DEBUGGING NOTES –

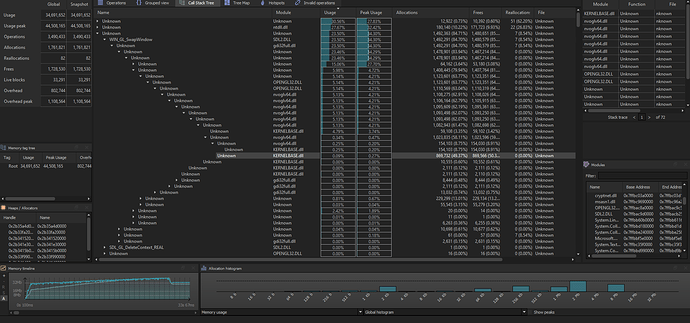

When debugging in Visual Studio, heap allocations stay as low as 30-40 MB while the process as a whole uses far more, around 1500-2000 MB.

Memory jumps by 1 MB every frame right after SDL_GL_SwapWindow. This can be seen by stepping through the code in the Visual Studio debugger while the leak is happening.

Tested in a VM, on 2 GPUs, and with 3 drivers.

In the VM: it does not seem to happen (we were only able to test with a software OpenGL driver that renders on the CPU). However, the leak still happens with a software OpenGL driver on the non-VM systems.

IMPORTANT NOTE: After some time (we let the process consume as much as it could), its memory usage stopped growing at about 2400 MB and then dropped to about 30 MB, although the computer's overall RAM usage kept increasing until we terminated the process. After that point the leak no longer appears to be charged to the process; it is leaking somewhere else (e.g. a kernel-mode driver). To be clear, the leak goes away and RAM usage drops back to its usual value once we terminate the process.

We also tried calling wglMakeCurrent and wglSwapLayerBuffers directly, bypassing SDL2, but the leak still happens.
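One way to put numbers on "leaking somewhere else" is to sample both the process's private bytes and the system-wide physical memory in use, then compare the two deltas. The sketch below is only an illustration, not something from our test program: `MemSample`, `unexplained_growth_mb` and `take_sample` are hypothetical names, and the Windows part assumes linking against psapi.lib.

```c
#ifdef _WIN32
#include <windows.h>
#include <psapi.h> /* link with psapi.lib */
#endif

/* One sample: memory charged to this process vs. physical memory in use system-wide. */
typedef struct {
    double process_private_mb;
    double system_used_mb;
} MemSample;

/* System-wide growth that the process's own private bytes do not explain. */
static double unexplained_growth_mb(MemSample before, MemSample after)
{
    double system_delta = after.system_used_mb - before.system_used_mb;
    double process_delta = after.process_private_mb - before.process_private_mb;
    return system_delta - process_delta;
}

#ifdef _WIN32
/* Fills *out with the current values. Returns 1 on success, 0 on failure. */
static int take_sample(MemSample *out)
{
    PROCESS_MEMORY_COUNTERS_EX pmc;
    MEMORYSTATUSEX status;
    const double mb = 1024.0 * 1024.0;

    status.dwLength = sizeof(status);
    if (!GetProcessMemoryInfo(GetCurrentProcess(),
                              (PROCESS_MEMORY_COUNTERS *)&pmc, sizeof(pmc))) {
        return 0;
    }
    if (!GlobalMemoryStatusEx(&status)) {
        return 0;
    }

    out->process_private_mb = (double)pmc.PrivateUsage / mb;
    out->system_used_mb = (double)(status.ullTotalPhys - status.ullAvailPhys) / mb;
    return 1;
}
#endif
```

Taking one sample before the render loop and another every few seconds, a large positive `unexplained_growth_mb` while the process's private bytes stay flat would match what we see. Other programs running at the same time also affect the system-wide figure, so this is only a rough indicator.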

CPU (if relevant): i5 10400 and i7 6800K

GPU: GTX 1050 and GTX 1080 Ti

It happens on both computers. Both run Windows 10; the OS versions for each GPU are below:

GTX 1050: Microsoft Windows - Version 21H1 (OS Build 19043.1526)

GTX 1080 Ti: Microsoft Windows - Version 21H1 (OS Build 19043.1706)

– FURTHER INFORMATION –

SDL Initialization Flags (First Test): SDL_INIT_VIDEO | SDL_INIT_EVENTS

SDL Initialization Flags (Second Test): SDL_INIT_EVENTS

SDL Initialization Flags (Third Test): 0

SDL Window Flags (First Test): SDL_WINDOW_RESIZABLE | SDL_WINDOW_OPENGL

SDL Window Flags (Second Test): SDL_WINDOW_OPENGL

SDL Window Creation Hints (First Test): [SDL_GL_CONTEXT_MAJOR_VERSION, 3], [SDL_GL_CONTEXT_MINOR_VERSION, 2], [SDL_GL_DOUBLEBUFFER, 1], [SDL_GL_DEPTH_SIZE, 24], [SDL_GL_STENCIL_SIZE, 8], [SDL_GL_RED_SIZE, 8], [SDL_GL_GREEN_SIZE, 8], [SDL_GL_BLUE_SIZE, 8], [SDL_GL_ALPHA_SIZE, 8], [SDL_GL_FRAMEBUFFER_SRGB_CAPABLE, 1]

SDL Window Creation Hints (Second Test): [SDL_GL_CONTEXT_MAJOR_VERSION, 3], [SDL_GL_CONTEXT_MINOR_VERSION, 2]

SDL Window Creation Hints (Third Test): 0
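For clarity, the first-test values above correspond to OpenGL attributes of the following kind. This sketch assumes they are set with SDL_GL_SetAttribute before SDL_CreateWindow is called; the helper name is hypothetical.

```c
#include <SDL.h>

/* First-test attributes; call before SDL_CreateWindow so they take effect. */
static void set_gl_attributes_first_test(void)
{
    SDL_GL_SetAttribute(SDL_GL_CONTEXT_MAJOR_VERSION, 3);
    SDL_GL_SetAttribute(SDL_GL_CONTEXT_MINOR_VERSION, 2);
    SDL_GL_SetAttribute(SDL_GL_DOUBLEBUFFER, 1);
    SDL_GL_SetAttribute(SDL_GL_DEPTH_SIZE, 24);
    SDL_GL_SetAttribute(SDL_GL_STENCIL_SIZE, 8);
    SDL_GL_SetAttribute(SDL_GL_RED_SIZE, 8);
    SDL_GL_SetAttribute(SDL_GL_GREEN_SIZE, 8);
    SDL_GL_SetAttribute(SDL_GL_BLUE_SIZE, 8);
    SDL_GL_SetAttribute(SDL_GL_ALPHA_SIZE, 8);
    SDL_GL_SetAttribute(SDL_GL_FRAMEBUFFER_SRGB_CAPABLE, 1);
}
```

The second test would keep only the two version attributes, and the third would skip the call entirely.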

Window Creation Function: SDL_CreateWindow

Context Creation Function used: SDL_GL_CreateContext

These leaks do not happen with GLFW, an API similar to SDL2, and all other applications work as they are supposed to. We note this to make clear that the problem is not with the hardware or the computers themselves.

We have tested all of this over 2 days and have not found a solution so far.

We tried OpenGL debugging, Visual Studio debugging, RenderDoc, and memory profilers, but none of them shows anything. Under RenderDoc, the process does not even seem to leak when it is launched through it.

Any help would be appreciated,

Thanks.