Hi,

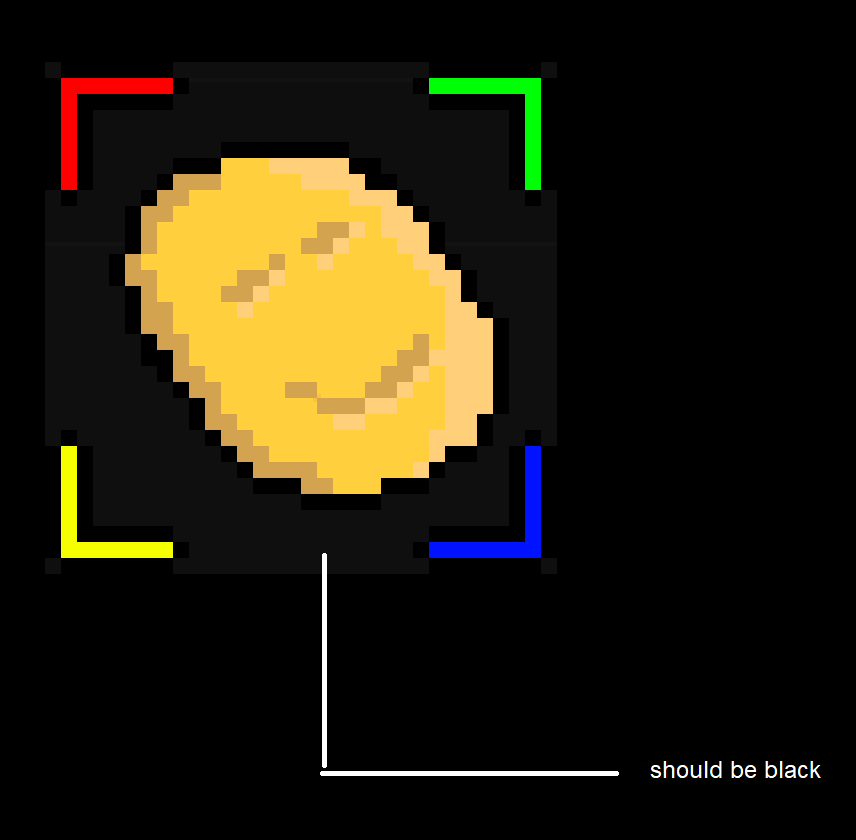

I'm trying to draw one texture onto another, but I get a gray color wherever the source has alpha = 0.

I tried setting blend modes all over the place, but it just gets confusing.

Thanks for your help.

    #include <SDL3/SDL.h>
    #include <cstring>

    // Copies srcTexture into dstTexture at (x, y): render it to a temporary target,
    // read the pixels back, then write them into the locked destination.
    // Assumes dstTexture is an SDL_TEXTUREACCESS_STREAMING texture in
    // SDL_PIXELFORMAT_RGBA8888 (4 bytes per pixel).
    void DrawTextureToTexture(SDL_Renderer* renderer, SDL_Texture* srcTexture, SDL_Texture* dstTexture, int x, int y) {
        if (!renderer || !srcTexture || !dstTexture) {
            SDL_Log("Invalid arguments to DrawTextureToTexture");
            return;
        }

        // Get source and destination texture sizes
        float srcW, srcH, dstW, dstH;
        if (!SDL_GetTextureSize(srcTexture, &srcW, &srcH) ||
            !SDL_GetTextureSize(dstTexture, &dstW, &dstH)) {
            SDL_Log("Failed to get texture size: %s", SDL_GetError());
            return;
        }
        int w = static_cast<int>(srcW);
        int h = static_cast<int>(srcH);

        // The row copy below does no clipping, so the source must fit inside the destination
        if (x < 0 || y < 0 || x + w > static_cast<int>(dstW) || y + h > static_cast<int>(dstH)) {
            SDL_Log("Source does not fit in dstTexture at (%d, %d)", x, y);
            return;
        }

        // Create temporary render target texture
        SDL_Texture* temp = SDL_CreateTexture(renderer, SDL_PIXELFORMAT_RGBA8888, SDL_TEXTUREACCESS_TARGET, w, h);
        if (!temp) {
            SDL_Log("Failed to create temp texture: %s", SDL_GetError());
            return;
        }

        // Copy srcTexture into temp unblended, so RGB is not multiplied by alpha;
        // the caller's blend mode is restored afterwards
        SDL_BlendMode srcBlend = SDL_BLENDMODE_BLEND;
        SDL_GetTextureBlendMode(srcTexture, &srcBlend);
        SDL_SetTextureBlendMode(srcTexture, SDL_BLENDMODE_NONE);
        SDL_SetRenderDrawBlendMode(renderer, SDL_BLENDMODE_NONE);

        // Render srcTexture into temp texture
        SDL_Texture* originalTarget = SDL_GetRenderTarget(renderer);
        SDL_SetRenderTarget(renderer, temp);
        SDL_SetRenderDrawColor(renderer, 0, 0, 0, 0);
        SDL_RenderClear(renderer);                                  // clear temp to transparent black
        SDL_RenderTexture(renderer, srcTexture, nullptr, nullptr);  // stretch src over the whole target

        // Read the pixels back while temp is still the render target
        SDL_Surface* rawSurface = SDL_RenderReadPixels(renderer, nullptr);
        SDL_SetRenderTarget(renderer, originalTarget);
        SDL_SetTextureBlendMode(srcTexture, srcBlend);
        SDL_DestroyTexture(temp);  // no longer needed once the pixels are read
        if (!rawSurface) {
            SDL_Log("Failed to read pixels: %s", SDL_GetError());
            return;
        }

        // Convert the surface to RGBA8888 to match the assumed destination format
        SDL_Surface* surface = SDL_ConvertSurface(rawSurface, SDL_PIXELFORMAT_RGBA8888);
        SDL_DestroySurface(rawSurface);
        if (!surface) {
            SDL_Log("Failed to convert surface to RGBA8888: %s", SDL_GetError());
            return;
        }

        SDL_SetTextureBlendMode(dstTexture, SDL_BLENDMODE_BLEND);
        SDL_SetTextureAlphaMod(dstTexture, 255);

        // Lock only the region being written: a locked region is write-only and
        // is not guaranteed to contain the old texture data
        SDL_Rect dstRect = { x, y, w, h };
        void* dstPixels = nullptr;
        int dstPitch = 0;
        if (!SDL_LockTexture(dstTexture, &dstRect, &dstPixels, &dstPitch)) {
            SDL_Log("Failed to lock dstTexture: %s", SDL_GetError());
            SDL_DestroySurface(surface);
            return;
        }

        // Copy row by row; dstPixels already points at (x, y).
        // This replaces the destination pixels (alpha included) rather than blending.
        const Uint8* srcPixels = static_cast<const Uint8*>(surface->pixels);
        Uint8* dstBytes = static_cast<Uint8*>(dstPixels);
        for (int row = 0; row < h; ++row) {
            std::memcpy(dstBytes + row * dstPitch, srcPixels + row * surface->pitch, w * 4);
        }

        SDL_UnlockTexture(dstTexture);
        SDL_DestroySurface(surface);
    }
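
One possible explanation for the gray, offered as a guess rather than a diagnosis: the `memcpy` in the function above replaces every destination pixel, alpha included, so a source pixel with alpha = 0 punches a transparent hole in the destination instead of leaving it alone. "Drawing" one image onto another normally means source-over blending. The plain C++ below (no SDL involved) is a minimal sketch of that blend. `BlendChannel` and `CompositeRGBA` are hypothetical names, and it assumes both buffers hold 4 bytes per pixel in R, G, B, A byte order with non-premultiplied alpha.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical helper: blend one 8-bit channel, source over destination,
// i.e. src * srcA + dst * (1 - srcA), with rounding.
static std::uint8_t BlendChannel(std::uint8_t src, std::uint8_t dst, std::uint8_t srcA) {
    return static_cast<std::uint8_t>((src * srcA + dst * (255 - srcA) + 127) / 255);
}

// Hypothetical helper: composite a w x h block of R,G,B,A bytes into dst at (x, y).
// Pitches are in bytes. The caller must make sure the block fits inside dst.
void CompositeRGBA(std::uint8_t* dst, int dstPitch,
                   const std::uint8_t* src, int srcPitch,
                   int x, int y, int w, int h) {
    for (int row = 0; row < h; ++row) {
        std::uint8_t* d = dst + static_cast<std::ptrdiff_t>(y + row) * dstPitch + x * 4;
        const std::uint8_t* s = src + static_cast<std::ptrdiff_t>(row) * srcPitch;
        for (int col = 0; col < w; ++col, d += 4, s += 4) {
            const std::uint8_t a = s[3];
            d[0] = BlendChannel(s[0], d[0], a);
            d[1] = BlendChannel(s[1], d[1], a);
            d[2] = BlendChannel(s[2], d[2], a);
            // dstA = srcA + dstA * (1 - srcA)
            d[3] = static_cast<std::uint8_t>(a + (d[3] * (255 - a) + 127) / 255);
        }
    }
}
```

With this blend, a fully transparent source pixel leaves the destination pixel unchanged and a fully opaque one replaces it. A locked streaming texture is write-only, so doing this on the CPU would need an application-side copy of the destination pixels. In SDL itself the same result is usually obtained by creating the destination with `SDL_TEXTUREACCESS_TARGET`, selecting it with `SDL_SetRenderTarget`, and drawing the source with `SDL_RenderTexture` while its blend mode is `SDL_BLENDMODE_BLEND`, which avoids the readback entirely.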