So I have this gradient that I’m very happy with in the GIMP. It looks all nice and smooth. I save it as a .png. I reload it in the GIMP and it still looks smooth. I load it with showimage and all sorts of banding occurs. This is (I think) somewhat understandable. My X server is running at 16-bit color, and GIMP saves .pngs in 32-bit color (right? Is there a way to change this?), so showimage dithers the .png to display it on its 16-bit surface and banding occurs, right?

But why isn’t that banding present when displayed in the GIMP?

Presumably it must dither the image to 16-bit color as well, seeing as that’s what my X server is running at. Is the GIMP just using a different, higher-quality algorithm to do this? Or am I missing something altogether different and important?

I’d be happy just saving the image in 16-bit color so that dithering isn’t necessary, but I don’t know how. Tips on that would also be appreciated.

Cheers,

-Josh

–

“Ittai koko wa doko nan da!”

wrote in message

news:Pine.BSF.4.21.0107062326020.92511-100000 at jusenkyo.skia.net…

So I have this gradient that I’m very happy with in the GIMP. It looks all nice and smooth. I save it as a .png. I reload it in the GIMP and it still looks smooth. I load it with showimage and all sorts of banding occurs. This is (I think) somewhat understandable. My X server is running at 16-bit color, and GIMP saves .pngs in 32-bit color (right? Is there a way to change this?), so showimage dithers the .png to display it on its 16-bit surface and banding occurs, right?

But why isn’t that banding present when displayed in the GIMP?

“Dithering” = placing pixels that are too bright next to pixels that are too dark, thereby creating the illusion of intermediate colors. GIMP performs dithering. SDL doesn’t: it just picks the closest color.

–

Rainer Deyke (root at rainerdeyke.com)

Shareware computer games - http://rainerdeyke.com

“In ihren Reihen zu stehen heisst unter Feinden zu kaempfen” - Abigor
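Rainer’s distinction is easy to see on a single 8-bit channel. The C sketch below uses hypothetical helper names. It assumes the no-dither path simply drops the low three bits, which is close to, but not exactly, “closest color”. It compares that truncation to 5 bits against a one-dimensional error-diffusion dither that carries each pixel’s rounding error into its right-hand neighbour.

```c
#include <stddef.h>

/* No-dither reduction: keep the top 5 bits of an 8-bit channel (0..31). */
static unsigned char quantize5(unsigned char v)
{
    return (unsigned char)(v >> 3);
}

/* Expand a 5-bit level back to 8 bits by bit replication (0 -> 0, 31 -> 255). */
static int expand5(int q)
{
    return (q << 3) | (q >> 2);
}

/* Closest 5-bit level to an 8-bit value, found by trying all 32 levels. */
static unsigned char nearest5(int v)
{
    int best = 0, best_d = 1 << 30;
    for (int q = 0; q < 32; q++) {
        int d = v - expand5(q);
        if (d < 0)
            d = -d;
        if (d < best_d) {
            best_d = d;
            best = q;
        }
    }
    return (unsigned char)best;
}

/* Without dithering: neighbouring inputs collapse onto one level (banding). */
void truncate_row(const unsigned char *in, unsigned char *out, size_t n)
{
    for (size_t i = 0; i < n; i++)
        out[i] = quantize5(in[i]);
}

/* 1-D error diffusion: each pixel's rounding error is added to the next
   pixel, so too-dark and too-bright outputs alternate and average out. */
void dither_row(const unsigned char *in, unsigned char *out, size_t n)
{
    int err = 0;
    for (size_t i = 0; i < n; i++) {
        int want = in[i] + err;
        if (want < 0)
            want = 0;
        if (want > 255)
            want = 255;
        out[i] = nearest5(want);
        err = want - expand5(out[i]);
    }
}
```

On a 0..255 ramp, truncation produces 32 flat runs of 8 pixels each, and those runs are the bands. A flat value of 100 lies between the 5-bit levels 99 and 107. For that value, truncation gives a solid 99, while the dithered row mixes both levels.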

skia at skia.net wrote:

I’d be happy just saving the image in 16bit color so that dithering isn’t

necessary, but I don’t know how. Tips on that would also be appreciated.

I’m having the same problem, and just posted about it a few hours ago.

Basically, the problem isn’t dithering at all. I’m not sure what it is,

but when I read your post I decided to test it, and saved the image in

8-bit for running on a 16-bit x-server, and the problem was still there.

I don’t have a clue what is going on. Other complex color patterns seem

to show up OK, SDL_Image just does not like gradients for some reason.


Uh, so are you going to email me that image, so I can debug it?

–ryan.

“Ryan C. Gordon” wrote:

to show up OK, SDL_Image just does not like gradients for some reason.

Uh, so are you going to email me that image, so I can debug it?

I already did, didn’t you get it? Don’t tell me my mail server is messed up; I just spent 40 minutes typing an email! Sigh. I’ll try to send it along again.

–

“I’m willing to J.O.B., just not on no jagg-off shoe-shine tip”

“Jagg-off shoe-shine tip?”

“No background-checking jagg-off shoe-shine tip”

(sorry if this is a re-post, but it’s been a day now and I haven’t seen

this go on the list yet… and I don’t think the mail servers are THAT

slow…)

So I have this gradient that I’m very happy with in the GIMP. It looks all nice and smooth. I save it as a .png. I reload it in the GIMP and it still looks smooth. I load it with showimage and all sorts of banding occurs. This is (I think) somewhat understandable. My X server is running at 16-bit color, and GIMP saves .pngs in 32-bit color (right? Is there a way to change this?), so showimage dithers the .png to display it on its 16-bit surface and banding occurs, right?

But why isn’t that banding present when displayed in the GIMP?

Presumably it must dither the image to 16-bit color as well, seeing as that’s what my X server is running at. Is the GIMP just using a different, higher-quality algorithm to do this? Or am I missing something altogether different and important?

I’d be happy just saving the image in 16-bit color so that dithering isn’t necessary, but I don’t know how. Tips on that would also be appreciated.

Cheers,

-Josh

–

“Ittai koko wa doko nan da!”

Have you tried different X modes/depths? Have you tried image apps other than GIMP? Netscape, even? What does SDL report about the depth of your X screen?

On Sat, 7 Jul 2001 skia at skia.net wrote:

(sorry if this is a re-post, but it’s been a day now and I haven’t seen

this go on the list yet… and I don’t think the mail servers are THAT

slow…)

So I have this gradient that I’m very happy with in the GIMP. It looks all nice and smooth. I save it as a .png. I reload it in the GIMP and it still looks smooth. I load it with showimage and all sorts of banding occurs. This is (I think) somewhat understandable. My X server is running at 16-bit color, and GIMP saves .pngs in 32-bit color (right? Is there a way to change this?), so showimage dithers the .png to display it on its 16-bit surface and banding occurs, right?

But why isn’t that banding present when displayed in the GIMP?

Presumably it must dither the image to 16-bit color as well, seeing as that’s what my X server is running at. Is the GIMP just using a different, higher-quality algorithm to do this? Or am I missing something altogether different and important?

I’d be happy just saving the image in 16-bit color so that dithering isn’t necessary, but I don’t know how. Tips on that would also be appreciated.

Cheers,

-Josh

–

“Ittai koko wa doko nan da!”

So I have this gradient that I’m very happy with in the GIMP. It looks all nice and smooth. I save it as a .png. I reload it in the GIMP and it still looks smooth. I load it with showimage and all sorts of banding occurs. This is (I think) somewhat understandable. My X server is running at 16-bit color, and GIMP saves .pngs in 32-bit color (right? Is there a way to change this?), so showimage dithers the .png to display it on its 16-bit surface and banding occurs, right?

But why isn’t that banding present when displayed in the GIMP?

“Dithering” = placing pixels that are too bright next to pixels that are too

dark, thereby creating the illusion of intermediate colors. GIMP performs

dithering. SDL doesn’t: it just picks the closest color.

Ah, yes. That makes sense.

Thanks for everyone’s advice with this. The best work-around I have found thus far is to take a screenshot of the gradient as it is being displayed by GIMP or some other program that dithers the gradient for display in 16-bit color, and then save that screenshot as a PNG. This way SDL_Image still “converts” the image to 16-bit, but doesn’t need to substitute any colors, because it’s been dithered to the 16-bit colorspace already (even though the PNG itself is natively 32 bits).
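Josh’s reasoning can be checked directly. Once pixels lie on the 16-bit grid, expanding them to 8 bits per channel and truncating again returns the same 16-bit value, so the pre-dithered pattern survives the load-time conversion. The minimal C sketch below assumes an RGB565 layout and bit-replication expansion. An X server might zero-fill the low bits instead, which also round-trips. The function names are hypothetical.

```c
#include <stdint.h>

/* Truncating RGB888 -> RGB565 pack: keep the top 5/6/5 bits. */
static uint16_t pack565(uint8_t r, uint8_t g, uint8_t b)
{
    return (uint16_t)(((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3));
}

/* RGB565 -> RGB888 by bit replication, as a display or screenshot might. */
static void unpack565(uint16_t p, uint8_t *r, uint8_t *g, uint8_t *b)
{
    unsigned r5 = (p >> 11) & 0x1F, g6 = (p >> 5) & 0x3F, b5 = p & 0x1F;
    *r = (uint8_t)((r5 << 3) | (r5 >> 2));
    *g = (uint8_t)((g6 << 2) | (g6 >> 4));
    *b = (uint8_t)((b5 << 3) | (b5 >> 2));
}

/* Returns 1 if every 16-bit colour survives expand-then-truncate unchanged. */
int roundtrip_is_lossless(void)
{
    for (uint32_t p = 0; p <= 0xFFFF; p++) {
        uint8_t r, g, b;
        unpack565((uint16_t)p, &r, &g, &b);
        if (pack565(r, g, b) != p)
            return 0;
    }
    return 1;
}
```

The low bits added during expansion are always the ones thrown away again by the truncating pack. For that reason, a screenshot taken from a 16-bit display loses nothing further when it is converted back to 16 bits.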

This is, however, horribly kludgy. Anyone know of a program, or a plugin for GIMP, or SOMETHING that will dither a 32-bit image to 16 bits for you and let you SAVE that dithered image?

Cheers,

-Josh

–

“Ittai koko wa doko nan da!”

skia at skia.net wrote:

Thanks for everyone’s advice with this. The best work-around I have found thus far is to take a screenshot of the gradient as it is being displayed by GIMP or some other program that dithers the gradient for display in 16-bit color, and then save that screenshot as a PNG. This way SDL_Image still “converts” the image to 16-bit, but doesn’t need to substitute any colors, because it’s been dithered to the 16-bit colorspace already (even though the PNG itself is natively 32 bits).

Wow, you’re right, that does work, thanks! But how do you account for the black and yellow border GIMP puts around images? When I take the screenshot, it covers up my own border for the image, so I have to zoom in so the single-pixel border GIMP puts there doesn’t cover up my single-pixel border, then take a screenshot, then crop it, and shrink it.

This is, however, horribly kludgy. Anyone know of a program, or a plugin for GIMP, or SOMETHING that will dither a 32-bit image to 16 bits for you and let you SAVE that dithered image?

I think I am going to ask the GIMP mailing list. Saving as 16-bit should certainly be an option. Someone mentioned that TGA is 16 bits natively, but saving as TGA doesn’t work either. I’m fairly certain that TGA has 16 bits as an option, but 24 and 32 as well. GIMP seems to just save at the highest depth possible.

I’ll post here if I find out anything.

Wow, you’re right, that does work, thanks! But how do you account for the black and yellow border GIMP puts around images? When I take the screenshot, it covers up my own border for the image, so I have to zoom in so the single-pixel border GIMP puts there doesn’t cover up my single-pixel border, then take a screenshot, then crop it, and shrink it.

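Use the convert program from ImageMagick.

You can do just about any important image conversion (effects, and converting between data types: gif->png, etc.).

And you can do it in batch:

for f in *.png ; do convert "$f" -dither FloydSteinberg -colors 65536 "$f.tmp.png" && mv "$f.tmp.png" "$f" ; done

(Completely untested, but that might do it. Note that -colors reduces to an adaptive palette of at most 65536 colors rather than to the fixed 16-bit RGB565 set, so a smooth gradient that already has fewer colors than that may pass through unchanged.)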

–ryan.

Thanks for everyone’s advice with this. The best work-around I have found

thus far is to take a screen shot of the gradient as it is being displayed

by GIMP or some other program that dithers the gradient for display in 16

bit color, and then save that screen shot as a png.

Not a bad workaround if you just have a couple of images that you don’t need to edit often.

This is, however, horribly kludgy. Anyone know of a program, or a plugin for GIMP, or SOMETHING that will dither a 32-bit image to 16 bits for you and let you SAVE that dithered image?

See if the netpbm tools or ImageMagick can do that. Otherwise, write your own ditherer, and you might even learn something. Try a Floyd-Steinberg dither for a start; you should find plenty of information in textbooks and even on the net. You can probably get even better results with a more sophisticated dither, but for 24->16 an FS dither is probably good enough.
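Here is a minimal Floyd-Steinberg sketch in C for the 24->16 case. It assumes packed RGB888 input and RGB565 output; the function names and memory layout are hypothetical, not from any library. It scans every row left to right and spreads each channel’s rounding error with the classic 7/16, 3/16, 5/16 and 1/16 weights. For simplicity, the error buffers are floats.

```c
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Expand a `bits`-wide level (5 or 6) to 8 bits by bit replication. */
static int expand_level(int q, int bits)
{
    return (q << (8 - bits)) | (q >> (2 * bits - 8));
}

/* Nearest level for v in 0..255; the linear estimate is within one step,
   so only it and its two neighbours are checked. */
static int nearest_level(int v, int bits, int *out_val)
{
    int max = (1 << bits) - 1;
    int q0 = (v * max + 127) / 255;
    int best = q0, best_d = 1 << 30;
    for (int q = q0 - 1; q <= q0 + 1; q++) {
        if (q < 0 || q > max)
            continue;
        int d = abs(v - expand_level(q, bits));
        if (d < best_d) {
            best_d = d;
            best = q;
        }
    }
    *out_val = expand_level(best, bits);
    return best;
}

/* Floyd-Steinberg dither of packed RGB888 (w*h*3 bytes, row-major) to RGB565.
   Returns 0 on success, -1 if the error buffers cannot be allocated. */
int fs_dither_565(const uint8_t *rgb, int w, int h, uint16_t *out)
{
    static const int bits[3] = {5, 6, 5};
    size_t row = (size_t)(w + 2) * 3;      /* one padding pixel on each side */
    float *cur = calloc(row, sizeof *cur); /* error carried into this row */
    float *nxt = calloc(row, sizeof *nxt); /* error carried into the next row */
    if (!cur || !nxt) {
        free(cur);
        free(nxt);
        return -1;
    }
    for (int y = 0; y < h; y++) {
        for (int x = 0; x < w; x++) {
            int q[3];
            for (int c = 0; c < 3; c++) {
                size_t i = (size_t)(x + 1) * 3 + c;
                float v = rgb[((size_t)y * w + x) * 3 + c] + cur[i];
                if (v < 0.0f) v = 0.0f;
                if (v > 255.0f) v = 255.0f;
                int val;
                q[c] = nearest_level((int)(v + 0.5f), bits[c], &val);
                float err = v - (float)val;
                cur[i + 3] += err * 7.0f / 16.0f; /* right */
                nxt[i - 3] += err * 3.0f / 16.0f; /* below-left */
                nxt[i]     += err * 5.0f / 16.0f; /* below */
                nxt[i + 3] += err * 1.0f / 16.0f; /* below-right */
            }
            out[(size_t)y * w + x] = (uint16_t)((q[0] << 11) | (q[1] << 5) | q[2]);
        }
        /* the next row's error becomes current; clear the other buffer */
        float *tmp = cur;
        cur = nxt;
        nxt = tmp;
        memset(nxt, 0, row * sizeof *nxt);
    }
    free(cur);
    free(nxt);
    return 0;
}
```

Colors that already sit exactly on the RGB565 grid pass through unchanged. In-between shades come out as a fine mix of the two neighbouring levels. A serpentine (alternating-direction) scan is a common refinement if the left-to-right pattern is visible.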

I suggest you either use SDL for your converter (with SDL_image for loading, and saving the images as BMP or TGA), or convert the images into PPM (which is very easy to read and write) and do all your operations on that, skipping SDL.
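The PPM route is attractive because binary PPM (P6) is just a short ASCII header (P6, width, height, maxval) followed by raw RGB bytes. Below is a minimal C reader and writer. It assumes maxval 255 and no # comment lines in the header; real files may contain comments, so this is a simplification. The names are hypothetical.

```c
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>

/* Write packed RGB888 pixels as a binary PPM (P6). Returns 0 on success. */
int ppm_write(const char *path, const uint8_t *rgb, int w, int h)
{
    FILE *f = fopen(path, "wb");
    if (!f)
        return -1;
    fprintf(f, "P6\n%d %d\n255\n", w, h);
    size_t n = (size_t)w * h * 3;
    int ok = fwrite(rgb, 1, n, f) == n;
    return (fclose(f) == 0 && ok) ? 0 : -1;
}

/* Read a binary PPM with maxval 255 and no header comments.
   Returns a malloc'd RGB888 buffer (caller frees), or NULL on error. */
uint8_t *ppm_read(const char *path, int *w, int *h)
{
    FILE *f = fopen(path, "rb");
    if (!f)
        return NULL;
    int maxval;
    uint8_t *rgb = NULL;
    /* header fields, then exactly one whitespace byte before the pixels */
    if (fscanf(f, "P6 %d %d %d", w, h, &maxval) == 3 && maxval == 255 &&
        *w > 0 && *h > 0 && fgetc(f) != EOF) {
        size_t n = (size_t)*w * *h * 3;
        rgb = malloc(n);
        if (rgb && fread(rgb, 1, n, f) != n) {
            free(rgb);
            rgb = NULL;
        }
    }
    fclose(f);
    return rgb;
}
```

A processed PPM can then be turned back into a PNG with netpbm’s pnmtopng or ImageMagick’s convert.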

This is, however, horribly kludgy. Anyone know of a program, or a plugin for GIMP, or SOMETHING that will dither a 32-bit image to 16 bits for you and let you SAVE that dithered image?

Thought of looking at GIMP sources ?-)

– Timo Suoranta – @Timo_K_Suoranta –

One thing you do need to remember: the gradation is actually an artifact of the graphics program you used to create it (GIMP). If you are converting the image to the format of the screen (SDL_DisplayFormat or SDL_ConvertSurface), you may lose that gradation, because you will be reducing the number of colors in the image.

One thing you can do (and there are others) is just not convert the surface before using it. This will slow down your blits, but keep your gradation.

On Saturday 07 July 2001 04:41, John Garrison wrote:

skia at skia.net wrote:

I’d be happy just saving the image in 16bit color so that dithering isn’t

necessary, but I don’t know how. Tips on that would also be appreciated.

I’m having the same problem, and just posted about it a few hours ago.

Basically, the problem isn’t dithering at all. I’m not sure what it is,

but when I read your post I decided to test it, and saved the image in

8-bit for running on a 16-bit x-server, and the problem was still there.

I don’t have a clue what is going on. Other complex color patterns seem

to show up OK, SDL_Image just does not like gradients for some reason.

–

Sam “Criswell” Hart <@Sam_Hart> AIM, Yahoo!:

Homepage: < http://www.geekcomix.com/snh/ >

PGP Info: < http://www.geekcomix.com/snh/contact/ >

Advogato: < http://advogato.org/person/criswell/ >

I think this is the second time this has been mentioned, and it’s confused me both times. If there’s an image in, say, a 24-bit format, and it needs to be blitted to a 16-bit surface, then doesn’t the blitter have to convert the image anyway? I mean, a 24-bit image clearly cannot be displayed on a 16-bit surface, and the blitter isn’t just dropping off the extra bits, so some sort of conversion is taking place. I thought the point of converting the image was to take this repetitive, time-consuming task (converting 24->16 on each blit) and make it a one-time time-consuming task (convert 24->16, and then blit 16 without conversion). Am I totally smoking ether here?

At any rate, whether I convert the 24-bit image to my 16-bit surface or not, I get the same result. I mean, the resultant image looks identical whether I convert it first and then pass it to the blitter, or whether I let the blitter deal with a full 24-bit image however it may. So at least something of what you say above would seem to be wrong.

Cheers,

-Josh

On Saturday 07 July 2001 04:41, John Garrison wrote:

@Josh_Emmons wrote:

I’d be happy just saving the image in 16bit color so that dithering isn’t

necessary, but I don’t know how. Tips on that would also be appreciated.

I’m having the same problem, and just posted about it a few hours ago.

Basically, the problem isn’t dithering at all. I’m not sure what it is,

but when I read your post I decided to test it, and saved the image in

8-bit for running on a 16-bit x-server, and the problem was still there.

I don’t have a clue what is going on. Other complex color patterns seem

to show up OK, SDL_Image just does not like gradients for some reason.

One thing you do need to remember: the gradation is actually an artifact of the graphics program you used to create it (GIMP). If you are converting the image to the format of the screen (SDL_DisplayFormat or SDL_ConvertSurface), you may lose that gradation, because you will be reducing the number of colors in the image.

One thing you can do (and there are others) is just not convert the surface before using it. This will slow down your blits, but keep your gradation.

–

“Ittai koko wa doko nan da!”

At any rate, whether I convert the 24-bit image to my 16-bit surface or not, I get the same result. I mean, the resultant image looks identical whether I convert it first and then pass it to the blitter, or whether I let the blitter deal with a full 24-bit image however it may. So at least something of what you say above would seem to be wrong.

SDL_BlitSurface() will convert on-the-fly, if it needs to. It is

preferable to convert ahead of time, if that’s feasible. That’s what

SDL_ConvertSurface() is for.

I still think that dithering in ConvertSurface() (which is NOT called at

blit time, if I’m correct) wouldn’t be such a bad idea, but, as someone

said, you can write a dithering routine yourself in about 20 minutes.

–ryan.

Sorry… must not be explaining myself very well

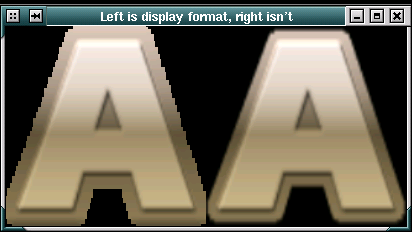

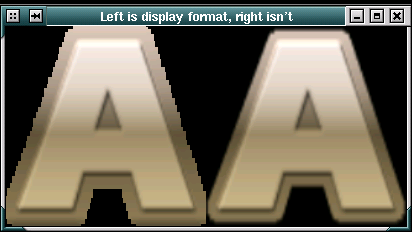

So I’ve come up with a quick and dirty example. You can find the example code

here:

http://www.geekcomix.com/snh/files/demos/gradtest.tar.gz

And a screenshot of the example here:

Note that (as I said before) this may not be what the problem in your code is. It just sounded to me like it may be. Also note that this example does not even consider SDL_DisplayFormatAlpha().

On Monday 09 July 2001 19:22, skia at skia.net wrote:

I think this is the second time this has been mentioned, and it’s confused

me both times.

–

Sam “Criswell” Hart <@Sam_Hart> AIM, Yahoo!:

Homepage: < http://www.geekcomix.com/snh/ >

PGP Info: < http://www.geekcomix.com/snh/contact/ >

Advogato: < http://advogato.org/person/criswell/ >

Not that I have any authority here, but…

I’d have the blitter do a nearest-pixel algorithm for the 24->16 blit and expect the programmer to do something smarter (interpolate, Floyd-Steinberg) if they want to. Why bloat the blitter?

On Mon, Jul 09, 2001 at 03:22:43PM -0400, skia at skia.net wrote:

I think this is the second time this has been mentioned, and it’s confused me both times. If there’s an image in, say, a 24-bit format, and it needs to be blitted to a 16-bit surface, then doesn’t the blitter have to convert the image anyway? I mean, a 24-bit image clearly cannot be displayed on a 16-bit surface, and the blitter isn’t just dropping off the extra bits, so some sort of conversion is taking place. I thought the point of converting the image was to take this repetitive, time-consuming task (converting 24->16 on each blit) and make it a one-time time-consuming task (convert 24->16, and then blit 16 without conversion). Am I totally smoking ether here?

–

The more I know about the WIN32 API the more I dislike it. It is complex and

for the most part poorly designed, inconsistent, and poorly documented.

- David Korn

I’d have the blitter do a nearest-pixel algorithm for the 24->16 blit and expect the programmer to do something smarter (interpolate, Floyd-Steinberg) if they want to. Why bloat the blitter?

You sum it up quite well. It’s the same reason why we don’t coalesce

rectangles in SDL_UpdateRects(): the programmer knows more about what

will happen at runtime and can use this fact to his advantage.

Most of the time, the 24->16 conversion needs no dithering, since enough bits are kept; only with stretched gradients in static images is the difference noticeable (so it is fairly rare in games).
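Here is a rough worked example; the 640-pixel width W is an assumption chosen for illustration. A horizontal ramp from 0 to 255 on a 16-bit RGB565 surface has only 2^5 = 32 levels for red and blue and 2^6 = 64 for green. Without dithering, each flat band is therefore about:

```latex
\text{band width} \approx \frac{W}{2^{b}}, \qquad
\frac{640}{2^{5}} = 20\ \text{px (red, blue)}, \qquad
\frac{640}{2^{6}} = 10\ \text{px (green)}
```

Bands that wide are easy to spot in a smooth, static gradient, whereas busy game graphics rarely expose them.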

It is way more efficient to pre-dither these when needed, and in the rare case that such gradients are generated on the fly, the programmer should know better how to take care of it.

The {24,16}->8 case is way more troublesome, and guess what, we leave that for the programmer as well.