Many explanations of SDL_Texture say that it stores the texture in VRAM. However, in my tests the texture data appears to be held in regular RAM and is only copied to the GPU inside SDL_RenderPresent, after the texture has been drawn with SDL_RenderCopy for the first time. A simple example follows.

    #include <cstdio>
    #include <chrono>
    #include <SDL2/SDL.h>
    #include <SDL2/SDL_image.h>

    using namespace std;
    using namespace std::chrono;

    int main(int argc, char* argv[])
    {
        SDL_Init(SDL_INIT_EVERYTHING);
        // Flags are Uint32 bitmasks, so pass 0 rather than NULL.
        auto window_ptr = SDL_CreateWindow("SDL2", SDL_WINDOWPOS_UNDEFINED, SDL_WINDOWPOS_UNDEFINED, 1920, 1080, 0);
        // SDL_RENDERER_PRESENTVSYNC is not requested, so vsync is off.
        auto renderer_ptr = SDL_CreateRenderer(window_ptr, -1, 0);
        auto texture_ptr = IMG_LoadTexture(renderer_ptr, /*a 1920x1080 image*/);
        SDL_Rect src = { 0, 0, 1920, 1080 }, dst = { 0, 0, 1920, 1080 };
        SDL_Event event;
        bool running = true;
        while (running)
        {
            // event is only valid when SDL_PollEvent returns 1, so drain the queue.
            while (SDL_PollEvent(&event))
            {
                switch (event.type)
                {
                case SDL_QUIT:
                    running = false;
                    break;
                default:
                    break;
                }
            }
            SDL_RenderCopy(renderer_ptr, texture_ptr, &src, &dst);
            // steady_clock is monotonic, which suits interval timing.
            auto t0 = steady_clock::now();
            SDL_RenderPresent(renderer_ptr);
            auto t1 = steady_clock::now();
            // count() is a 64-bit value, so cast it and print with %lld.
            printf("%lldus\n", static_cast<long long>(duration_cast<microseconds>(t1 - t0).count()));
        }
        SDL_DestroyTexture(texture_ptr);
        SDL_DestroyRenderer(renderer_ptr);
        SDL_DestroyWindow(window_ptr);
        SDL_Quit();
        return 0;
    }

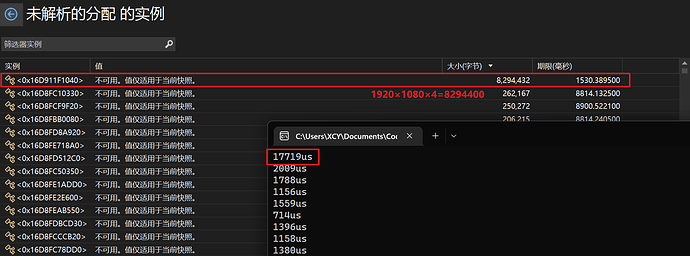

Before IMG_LoadTexture, the program's memory usage was low. After loading the texture, it rose sharply, and an allocation as large as the decoded image (1920 x 1080 x 4 bytes, about 8.3 MB) appeared. Shouldn't the texture data be stored on the GPU?
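For reference, the expected size of the decoded pixel data can be estimated as below. This is only a sketch: `rgba_bytes` is a hypothetical helper, not an SDL function, and it assumes 4 bytes per pixel with no row padding, which may not match the format the renderer actually picks.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper, not part of SDL: size in bytes of an uncompressed image,
// assuming 4 bytes per pixel (e.g. an RGBA8888 layout) and no row padding.
std::size_t rgba_bytes(int width, int height)
{
    assert(width >= 0 && height >= 0);
    return static_cast<std::size_t>(width) * static_cast<std::size_t>(height) * 4;
}
```

Under that assumption, `rgba_bytes(1920, 1080)` gives 8,294,400 bytes and `rgba_bytes(2544, 2016)` gives 20,514,816 bytes.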

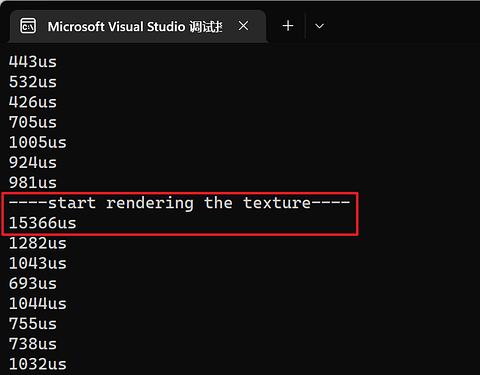

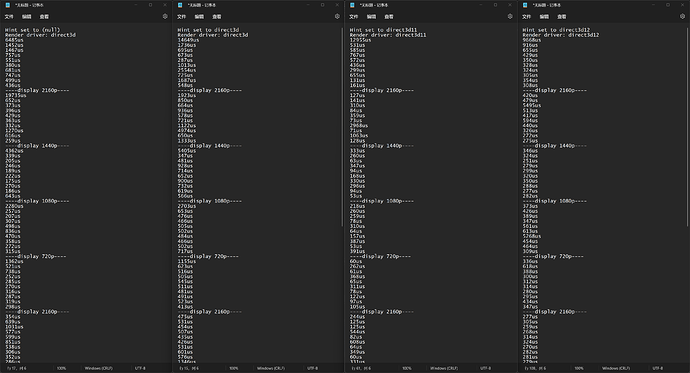

Next comes rendering the texture. I turned off vertical synchronization so that I could see how long each frame took. The first call to SDL_RenderPresent took a full 17ms, while the later calls took only about 1ms each. I believe the texture data was not copied to the GPU until that first call to SDL_RenderPresent.
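To make the first-call versus later-call comparison explicit, the first sample could be recorded separately from the rest. A minimal sketch follows, assuming two hypothetical helpers, `time_us` and `FrameStats`, which are not part of SDL.

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>
#include <utility>

// Hypothetical helper: runs fn once and returns the elapsed time in microseconds.
template <typename Fn>
std::int64_t time_us(Fn&& fn)
{
    const auto t0 = std::chrono::steady_clock::now();
    std::forward<Fn>(fn)();
    const auto t1 = std::chrono::steady_clock::now();
    return std::chrono::duration_cast<std::chrono::microseconds>(t1 - t0).count();
}

// Hypothetical accumulator: keeps the first sample apart from all later ones.
struct FrameStats
{
    std::int64_t first_us = -1;       // -1 means no sample recorded yet
    std::int64_t later_total_us = 0;  // sum of every sample after the first
    std::int64_t later_count = 0;     // number of samples after the first

    void add(std::int64_t us)
    {
        assert(us >= 0);
        if (first_us < 0)
        {
            first_us = us;
        }
        else
        {
            later_total_us += us;
            ++later_count;
        }
    }

    // Mean of the samples after the first, or 0 if there are none.
    double later_mean_us() const
    {
        return later_count ? static_cast<double>(later_total_us) / later_count : 0.0;
    }
};
```

In the loop above, `stats.add(time_us([&] { SDL_RenderPresent(renderer_ptr); }));` could replace the manual `t0`/`t1` pair, with `stats` being a `FrameStats` declared before the loop.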

So what does "SDL_Texture stores data in VRAM" mean? Is there a parameter I have not set correctly? If textures really can only be copied to the GPU in SDL_RenderPresent, how should I handle large textures in game development? The game I'm developing has a 2544x2016 image, and the first time it is rendered takes a full 30ms, which directly causes a frame drop.