I am new to SDL2 and, out of curiosity, I was testing an example from the Lazy Foo tutorial. The program renders an image named x.bmp that lives in the same folder as the program. Here's the example program, testsdl2.cxx:

/*This source code copyrighted by Lazy Foo' Productions (2004-2022)
and may not be redistributed without written permission.*/

//Using SDL and standard IO
#include <SDL2/SDL.h>
#include <stdio.h>

//Screen dimension constants
const int SCREEN_WIDTH = 640;
const int SCREEN_HEIGHT = 480;

//Starts up SDL and creates window
bool init();

//Loads media
bool loadMedia();

//Frees media and shuts down SDL
void close();

//The window we'll be rendering to
SDL_Window* gWindow = NULL;

//The surface contained by the window
SDL_Surface* gScreenSurface = NULL;

//The image we will load and show on the screen
SDL_Surface* gXOut = NULL;

bool init()
{
    //Initialization flag
    bool success = true;

    //Initialize SDL
    if( SDL_Init( SDL_INIT_VIDEO ) < 0 )
    {
        printf( "SDL could not initialize! SDL_Error: %s\n", SDL_GetError() );
        success = false;
    }
    else
    {
        //Create window
        gWindow = SDL_CreateWindow( "SDL Tutorial", SDL_WINDOWPOS_UNDEFINED, SDL_WINDOWPOS_UNDEFINED, SCREEN_WIDTH, SCREEN_HEIGHT, SDL_WINDOW_SHOWN );
        if( gWindow == NULL )
        {
            printf( "Window could not be created! SDL_Error: %s\n", SDL_GetError() );
            success = false;
        }
        else
        {
            //Get window surface
            gScreenSurface = SDL_GetWindowSurface( gWindow );
        }
    }

    return success;
}

bool loadMedia()
{
    //Loading success flag
    bool success = true;

    //Load splash image
    gXOut = SDL_LoadBMP( "x.bmp" );
    if( gXOut == NULL )
    {
        //Report the same path that was passed to SDL_LoadBMP
        printf( "Unable to load image %s! SDL Error: %s\n", "x.bmp", SDL_GetError() );
        success = false;
    }

    return success;
}

void close()
{
    //Deallocate surface
    SDL_FreeSurface( gXOut );
    gXOut = NULL;

    //Destroy window
    SDL_DestroyWindow( gWindow );
    gWindow = NULL;

    //Quit SDL subsystems
    SDL_Quit();
}

int main()
{
    //Start up SDL and create window
    if( !init() )
    {
        printf( "Failed to initialize!\n" );
    }
    else
    {
        //Load media
        if( !loadMedia() )
        {
            printf( "Failed to load media!\n" );
        }
        else
        {
            //Main loop flag
            bool quit = false;

            //Event handler
            SDL_Event e;

            //While application is running
            while( !quit )
            {
                //Handle events on queue
                while( SDL_PollEvent( &e ) != 0 )
                {
                    //User requests quit
                    if( e.type == SDL_QUIT )
                    {
                        quit = true;
                    }
                }

                //Apply the image
                SDL_BlitSurface( gXOut, NULL, gScreenSurface, NULL );

                //Update the surface
                SDL_UpdateWindowSurface( gWindow );
            }
        }
    }

    //Free resources and close SDL
    close();

    return 0;
}

I compiled this with:

clang++ -std=c++20 -g -fsanitize=address -Wall -Wextra -Wpedantic -Werror -I/usr/include/SDL2 -lSDL2main -lSDL2 testsdl2.cxx -o vimbin

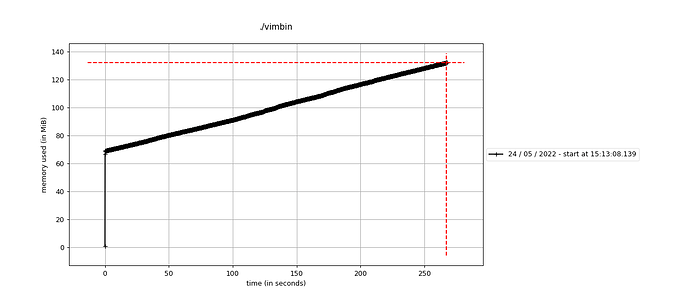

Running the binary reports some memory leaks. I have heard that AddressSanitizer sometimes produces false leak reports, so I decided to profile the memory usage of the binary. Here I am attaching the graph of memory usage:

I am using Ubuntu 20.04 LTS, and I installed SDL2 with:

sudo apt install libsdl2-dev

So what's wrong with the program? Is my SDL2 setup wrong, or is something wrong with the library itself? How can I get rid of the leaks? Let me know if additional information is needed.