Hi everyone,

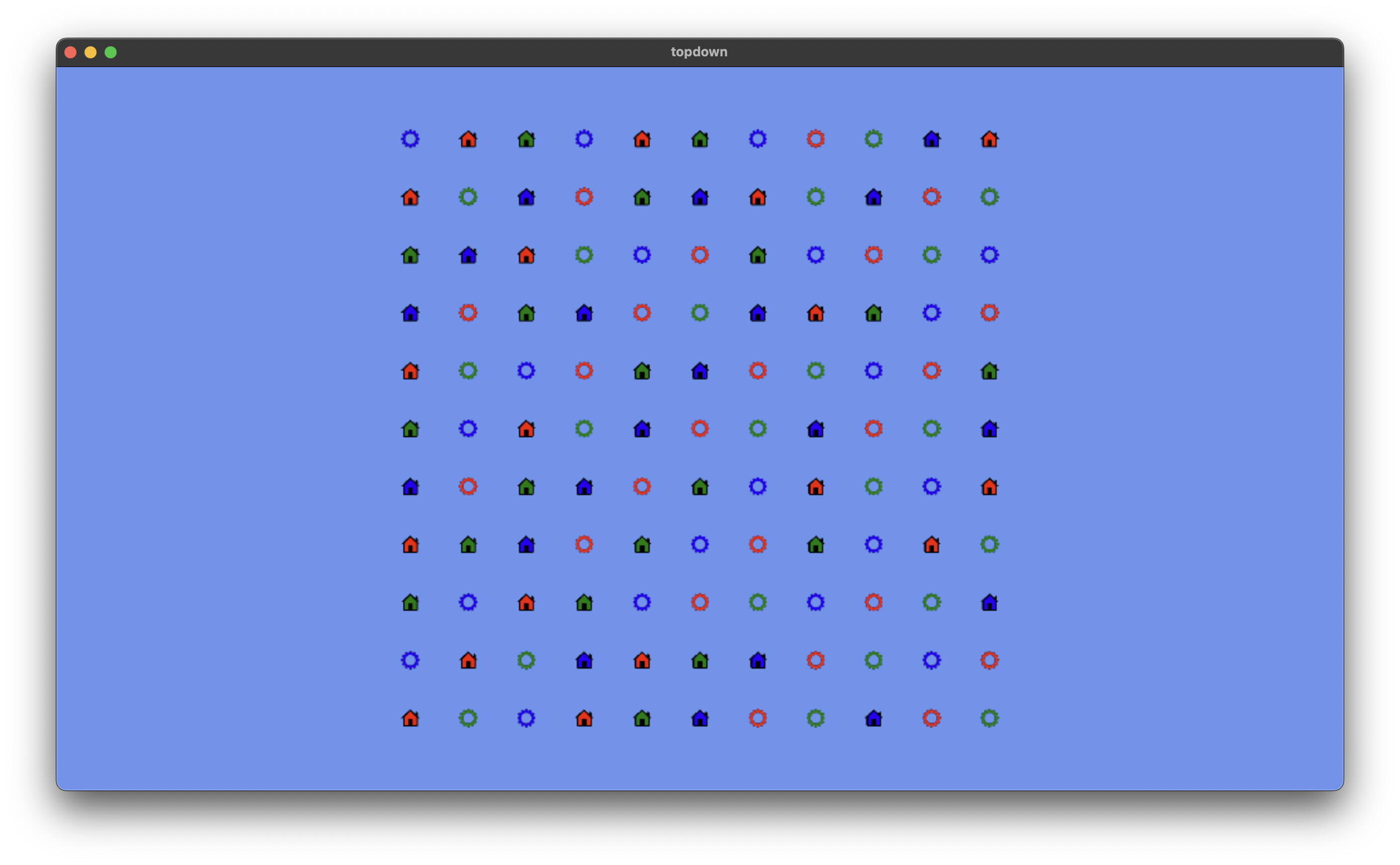

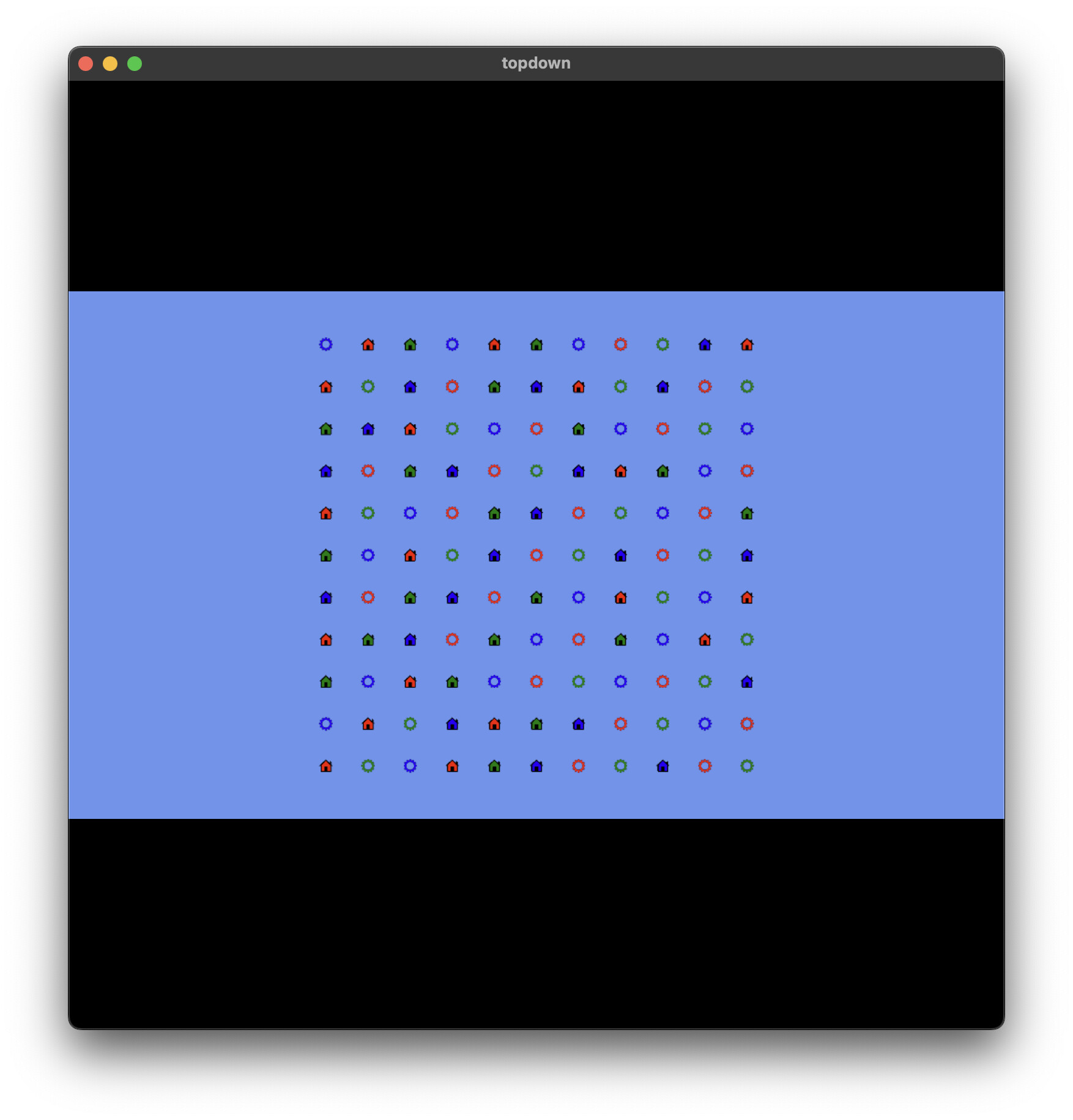

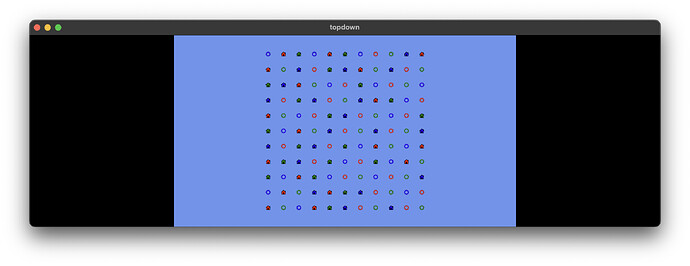

I’m struggling to understand what’s causing this issue.

In my game, rendering works like this:

- Draw the game into a low-resolution texture.

- Get the actual window size/resolution, calculate a scaling factor, and use an `SDL_GPUViewport` to upscale the render target while keeping it centered.

- Draw the render target to the window’s swapchain texture.
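To make the second step concrete, here is a minimal sketch of the scale-and-center calculation. It is not my exact code: the `Viewport` struct and the `centered_viewport` function are hypothetical stand-ins (the struct only mirrors the `x`, `y`, `w`, `h` fields of `SDL_GPUViewport`), and it assumes whole-number scaling and window dimensions given in pixels.

```c
/* Hypothetical stand-in mirroring the x, y, w, h fields of SDL_GPUViewport. */
typedef struct {
    float x, y, w, h;
} Viewport;

/*
 * Compute a centered viewport for a game_w x game_h render target inside a
 * win_w x win_h window. Assumption: the scale is the largest whole number
 * that fits in both dimensions, and never less than 1.
 */
static Viewport centered_viewport(int win_w, int win_h, int game_w, int game_h)
{
    int scale_x = win_w / game_w;   /* integer division rounds down */
    int scale_y = win_h / game_h;
    int scale = scale_x < scale_y ? scale_x : scale_y;
    if (scale < 1) {
        scale = 1;
    }

    int out_w = game_w * scale;
    int out_h = game_h * scale;

    Viewport vp;
    vp.w = (float)out_w;
    vp.h = (float)out_h;
    /* Split the leftover space evenly; integer math keeps the origin on a whole pixel. */
    vp.x = (float)((win_w - out_w) / 2);
    vp.y = (float)((win_h - out_h) / 2);
    return vp;
}
```

The window dimensions passed in are assumed to be the drawable size in pixels (in SDL3, `SDL_GetWindowSizeInPixels` reports this), which can differ from the logical window size when DPI scaling is active.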

This setup was working perfectly until I installed Windows 11 on my machine a few days ago. Now, all my sprites look like the images attached to this topic.

Interestingly, I tested the same executable on another machine with a different Nvidia GPU but the same drivers—running Windows 10—and everything renders perfectly.

I’ve read about potential DPI-related issues that might be causing this, but none of the solutions I’ve tried have worked so far. I couldn’t find any related topics on this exact issue, so apologies if I missed something.

Another thing worth mentioning (I think) is that when I take frame captures with RenderDoc, the captured frame looks perfect, without the corrupt-looking textures.

Any insights would be greatly appreciated.

Thanks in advance!