I need to display multiple video feeds simultaneously in one window, for example by dividing it into four regions that each show a different video. I want to render one region at a time, for example only the upper-left corner, without affecting the other regions.

I implemented this feature using SDL2, but it doesn’t work with SDL3. Is there any solution in SDL3?

You always need to redraw the whole screen each time before calling SDL_RenderPresent. This was true already in SDL2.

SDL2/SDL_RenderPresent - SDL2 Wiki

The backbuffer should be considered invalidated after each present; do not assume that previous contents will exist between frames.

If you want to “cache” the graphics and be able to update only the parts that you want then you could create a texture with SDL_TEXTUREACCESS_TARGET and draw to that instead. Then you just draw that texture to the screen each time.

SDL_Texture* texture = SDL_CreateTexture(renderer, SDL_PIXELFORMAT_RGBA8888, SDL_TEXTUREACCESS_TARGET, 600, 400);

...

// Draw to texture

SDL_SetRenderTarget(renderer, texture);

SDL_SetRenderDrawColor(renderer, 0, 0, 255, SDL_ALPHA_OPAQUE);

SDL_FRect rect = {10, 10, 50, 75};

...

// Update screen

SDL_SetRenderTarget(renderer, NULL);

SDL_SetRenderDrawColor(renderer, 127, 127, 127, SDL_ALPHA_OPAQUE);

SDL_RenderClear(renderer);

SDL_FRect dstrect = {0, 0, 600, 400};

SDL_RenderTexture(renderer, texture, NULL, &dstrect);

SDL_RenderPresent(renderer);

If you want to restrict the drawing to a certain area so that drawing outside it does not affect the rest of the screen you could use multiple textures, or use SDL_SetRenderViewport or SDL_SetRenderClipRect.

I’m not sure what the problem is, or why you can’t render to 1 of the 4 parts. Would SDL_SetRenderClipRect help? It lets you specify a rect, and everything outside of it does not get rendered.

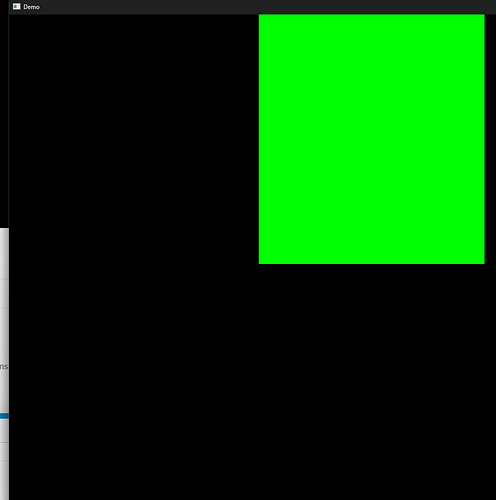

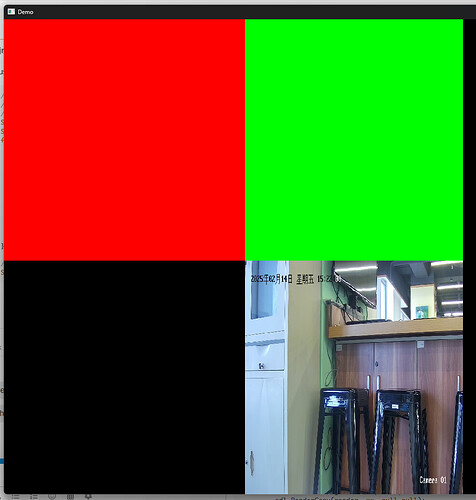

Look at this screenshot: this is an SDL window. I have divided it into four display areas, one of which shows the video image.

With SDL2, I only need to call RenderCopy(render, texture, null, rect) and then RenderPresent() to update the image in the upper-left corner without affecting the images already drawn elsewhere.

But with SDL3, doing so makes the images in the other areas disappear.

Yes, I saw the note on the official website, but in practice SDL2 retains the image from the previous frame. In my case, part of the source data is a border drawn in RGB and the other part is YUV data decoded by ffmpeg, so I couldn’t use a single pixel format when creating the texture.

If I want to create a texture in BGRA8888 format, my only option is to have ffmpeg convert the YUV data to BGRA8888, and that conversion costs a lot of CPU time.

You are relying on undefined behavior. The operating system or GPU driver could change this and your app would no longer function correctly.

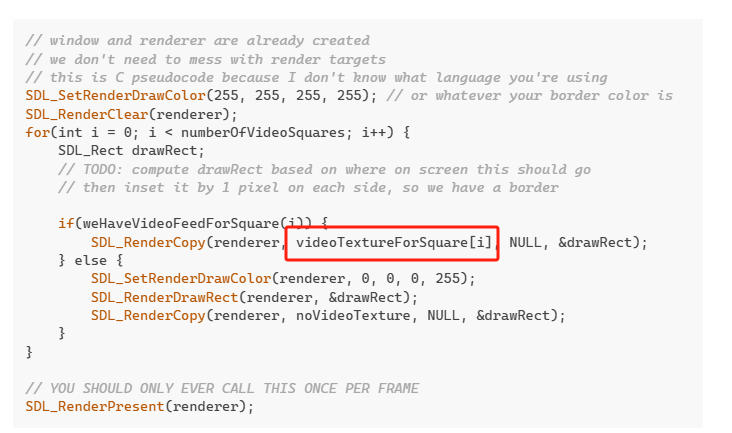

Each video stream should be a separate texture, and if there’s no camera/video then just draw the No Video graphic instead. Your textures don’t all have to be the same pixel format!

The border doesn’t even need to be a texture at all. Just set the clear color to your “border” color, clear the screen, and draw the video feeds with a one or two pixel inset. Or use SDL’s line drawing to draw the borders.
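The inset idea can be sketched in plain C as follows. This is only an illustration: `CellRect` and `grid_cell_rect` are hypothetical names that are not part of SDL, and the sketch assumes a regular grid with equally sized cells. With SDL3 the four fields could be copied into an `SDL_FRect` before drawing each feed.

```c
#include <assert.h>

/* Plain rectangle type used only for this sketch (not an SDL type). */
typedef struct {
    float x, y, w, h;
} CellRect;

/* Returns the rectangle for cell `index` (row-major order) in a
   cols x rows grid covering a window of window_w x window_h pixels,
   shrunk by `inset` pixels on every side so that the clear color
   shows through as a border between the cells. */
CellRect grid_cell_rect(float window_w, float window_h,
                        int cols, int rows, int index, float inset)
{
    assert(cols > 0 && rows > 0);
    assert(index >= 0 && index < cols * rows);

    float cell_w = window_w / (float)cols;
    float cell_h = window_h / (float)rows;
    int col = index % cols;
    int row = index / cols;

    CellRect r;
    r.x = (float)col * cell_w + inset;
    r.y = (float)row * cell_h + inset;
    r.w = cell_w - 2.0f * inset;
    r.h = cell_h - 2.0f * inset;
    return r;
}
```

For a 2x2 layout in a 1000x800 window with a 2 pixel inset, cell 3 (bottom right) comes out as x=502, y=402, w=496, h=396.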

Fine, I’ll try to do the caching myself.

What caching?

A simple case like this takes under 10 rendering calls for the entire frame. Clear the screen, draw each video feed with a 2 pixel inset (so you have a border) or draw the No Video graphic, and then call SDL_RenderPresent().

You only have to update each video feed texture when you have a new frame for it. You can skip rendering the entire screen if you don’t have any new video frames to show!
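One way to keep track of this is a "new frame" flag per feed. The following is a minimal sketch in plain C; `FeedState` and its functions are hypothetical helpers, not SDL API, and the sketch assumes all calls happen on one thread (if decoding runs on other threads, the flags would need a mutex or atomics).

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_FEEDS 25

/* Per-feed bookkeeping for this sketch (hypothetical, not an SDL type). */
typedef struct {
    bool has_new_frame[MAX_FEEDS];
    int feed_count;
} FeedState;

void feed_state_init(FeedState *s, int feed_count)
{
    assert(feed_count >= 0 && feed_count <= MAX_FEEDS);
    s->feed_count = feed_count;
    for (int i = 0; i < MAX_FEEDS; i++) {
        s->has_new_frame[i] = false;
    }
}

/* Call when the decoder delivers a frame for feed `i`; the upload
   into that feed's texture would happen at the same point. */
void feed_state_mark_new_frame(FeedState *s, int i)
{
    assert(i >= 0 && i < s->feed_count);
    s->has_new_frame[i] = true;
}

/* Call once per screen frame. Returns true if at least one feed
   changed since the previous call, which means the whole screen
   should be cleared, redrawn and presented once. Clears all flags. */
bool feed_state_needs_redraw(FeedState *s)
{
    bool dirty = false;
    for (int i = 0; i < s->feed_count; i++) {
        if (s->has_new_frame[i]) {
            dirty = true;
            s->has_new_frame[i] = false;
        }
    }
    return dirty;
}
```

In the main loop, the clear, the draw of every feed and the single present would run only when `feed_state_needs_redraw` returns true. Feeds that did not change are still drawn, using whatever their texture already holds.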

Sorry, could you give me a small example code? I’m a bit confused…

Sdl sdl = Sdl.GetApi();

int z = sdl.Init(Sdl.InitVideo);

Window* Window = sdl.CreateWindowFrom((void*)dis.Handle);

Renderer* Renderer = sdl.CreateRenderer(Window, -1, (uint)RendererFlags.Accelerated);

var texture = sdl.CreateTexture(Renderer, (uint)PixelFormatEnum.Bgra8888, (int)TextureAccess.Target, (int)dis.Bounds.Width, (int)dis.Bounds.Height);

sdl.SetRenderTarget(Renderer, texture);

sdl.SetRenderDrawColor(Renderer, 255, 0, 0, 255);

sdl.RenderFillRect(Renderer, new Rectangle<int>(500, 500, 500, 500));

sdl.SetRenderTarget(Renderer, null);

sdl.RenderCopy(Renderer, texture, null, null);

sdl.RenderPresent(Renderer);

sdl.SetRenderTarget(Renderer, texture);

sdl.SetRenderDrawColor(Renderer, 0, 255, 0, 255);

sdl.RenderFillRect(Renderer, new Rectangle<int>(0, 500, 500, 500));

sdl.RenderCopy(Renderer, texture, null, null);

sdl.SetRenderTarget(Renderer, null);

sdl.RenderPresent(Renderer);

Is this written incorrectly? It draws a black screen for me. I want a rectangle on both the top left and bottom right.

// SDL playback

Sdl sdl = Sdl.GetApi();

int z = sdl.Init(Sdl.InitVideo);

Window* Window = sdl.CreateWindowFrom((void*)dis.Handle);

Renderer* Renderer = sdl.CreateRenderer(Window, -1, (uint)RendererFlags.Accelerated);

var r1 = new Rectangle<int>(0, 0, 500, 500);

sdl.SetRenderDrawColor(Renderer, 255, 0, 0, 255);

sdl.RenderFillRect(Renderer, r1);

sdl.RenderPresent(Renderer);

var r2 = new Rectangle<int>(500, 0, 500, 500);

sdl.SetRenderDrawColor(Renderer, 0, 255, 0, 255);

sdl.RenderFillRect(Renderer, r2);

sdl.RenderPresent(Renderer);

If I write it this way, I get this result again.

Just something like

// window and renderer are already created

// we don't need to mess with render targets

// this is C pseudocode because I don't know what language you're using

SDL_SetRenderDrawColor(renderer, 255, 255, 255, 255); // or whatever your border color is

SDL_RenderClear(renderer);

for(int i = 0; i < numberOfVideoSquares; i++) {

SDL_Rect drawRect;

// TODO: compute drawRect based on where on screen this should go

// then inset it by 1 pixel on each side, so we have a border

if(weHaveVideoFeedForSquare(i)) {

SDL_RenderCopy(renderer, videoTextureForSquare[i], NULL, &drawRect);

} else {

SDL_SetRenderDrawColor(renderer, 0, 0, 0, 255);

SDL_RenderDrawRect(renderer, &drawRect);

SDL_RenderCopy(renderer, noVideoTexture, NULL, &drawRect);

}

}

// YOU SHOULD ONLY EVER CALL THIS ONCE PER FRAME

SDL_RenderPresent(renderer);

I’ve figured it out. Your method requires frame synchronization, because I decode images from more than one camera and each camera’s 25 frames per second is not synchronized with the others. I solved this problem with a render target.

// SDL playback

Sdl sdl = Sdl.GetApi();

int z = sdl.Init(Sdl.InitVideo);

Window* Window = sdl.CreateWindowFrom((void*)dis.Handle);

Renderer* Renderer = sdl.CreateRenderer(Window, -1, (uint)RendererFlags.Accelerated);

var texture = sdl.CreateTexture(Renderer, (uint)PixelFormatEnum.Bgra8888, (int)TextureAccess.Target, (int)dis.Bounds.Width, (int)dis.Bounds.Height);

sdl.SetRenderTarget(Renderer, texture);

var r1 = new Rectangle<int>(0, 0, 500, 500);

sdl.SetRenderDrawColor(Renderer, 255, 0, 0, 255);

sdl.RenderFillRect(Renderer, r1);

sdl.SetRenderTarget(Renderer, null);

sdl.RenderCopy(Renderer, texture, null, null);

sdl.RenderPresent(Renderer);

sdl.SetRenderTarget(Renderer, texture);

var r2 = new Rectangle<int>(500, 0, 500, 500);

sdl.SetRenderDrawColor(Renderer, 0, 255, 0, 255);

sdl.RenderFillRect(Renderer, r2);

sdl.SetRenderTarget(Renderer, null);

sdl.RenderCopy(Renderer, texture, null, null);

sdl.RenderPresent(Renderer);

sdl.SetRenderTarget(Renderer, texture);

Here is the code to decode each frame.

Texture* texture = sdl.CreateTexture(render, (uint)PixelFormatEnum.Iyuv, (int)TextureAccess.Streaming, 1920, 1080);

while (ffmpeg.av_read_frame(fctx1, &packet) >= 0)

{

if (packet.stream_index == 0)

{

ret = ffmpeg.avcodec_send_packet(codec_ctx, &packet);

if (ret < 0)

{

}

ret = ffmpeg.avcodec_receive_frame(codec_ctx, frame);

if (ret < 0)

{

}

var xx = sdl.GetRenderTarget(render);

sdl.UpdateYUVTexture(texture, null, frame->data[0], frame->linesize[0], frame->data[1], frame->linesize[1], frame->data[2], frame->linesize[2]);

var r3 = new Rectangle<int>(500, 500, 500, 500);

sdl.RenderCopy(render, texture, null, r3);

sdl.SetRenderTarget(render,null);

sdl.RenderCopy(render, xx, null,null);

sdl.RenderPresent(render);

sdl.SetRenderTarget(render, xx);

}

}

Thank you very much

So, again, you’re calling SDL_RenderPresent() multiple times per frame, which you don’t want to do.

And there’s no synchronization required. Update each camera’s texture whenever that video feed has a new frame. If you don’t then it just draws the video frame that’s already in there.

Using render targets is literally doing the same thing but with unnecessary extra steps.

I need to display at most 25 cameras in one window, each at 25 frames per second. Each render takes about 1 ms, so rendering once per decoded frame is fast enough for my needs. With more than 40 cameras, I would need your method of frame synchronization.
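The arithmetic behind these numbers can be sketched as follows. The helper names are hypothetical, and the sketch assumes the roughly 1 ms cost applies to every render-and-present pass; the 60 Hz figure in the note below is likewise only an assumed display refresh rate.

```c
#include <assert.h>

/* Presents per second when every decoded camera frame triggers
   its own render-and-present pass. */
int presents_per_second(int cameras, int fps_per_camera)
{
    assert(cameras >= 0 && fps_per_camera >= 0);
    return cameras * fps_per_camera;
}

/* Milliseconds spent rendering per wall-clock second, given a
   fixed cost in milliseconds for each pass. */
int render_ms_per_second(int presents, int ms_per_present)
{
    assert(presents >= 0 && ms_per_present >= 0);
    return presents * ms_per_present;
}
```

With 25 cameras at 25 fps this gives 625 passes and 625 ms of rendering per second. With 40 cameras it gives 1000 ms, which fills the whole second and is presumably where the limit of 40 comes from. Presenting once per screen frame on a 60 Hz display would mean 60 passes per second regardless of the camera count, although each pass then draws every feed, so its cost need not stay at 1 ms.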

? I don’t quite understand. I call it once per frame.

You should only call it once per screen frame, after all draw calls have been made and you want everything to be shown on the screen.

Your example code has multiple calls to SDL_RenderPresent(). You should have only one, when everything is finished for the current screen frame and you’re ready to show it on screen to the user.