Thank you very much for your answer.

I know what a target texture is. This is an absolutely bizarre way to implement a camera feed viewer application.

You don’t seem to understand that you’re supposed to redraw the whole screen every frame, and only call SDL_RenderPresent() once you’re done.

Also, if you’re worried about speed, creating a new texture for every camera on every loop iteration, copying the video frame into it, rendering it to a target texture, then rendering the target texture is gonna be way slower than my way.
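A minimal sketch of the "create once, update every frame" alternative, written in plain C so it stands alone. The `CameraSurface` struct and `camera_surface_update` function are hypothetical stand-ins for one camera's texture: in real SDL3 code the allocation step would be `SDL_CreateTexture` with `SDL_TEXTUREACCESS_STREAMING`, and the per-frame copy would be `SDL_UpdateTexture`. The point is that the expensive allocation happens only when the frame size changes, not on every loop iteration.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical stand-in for one camera's SDL texture. */
typedef struct {
    unsigned char *pixels; /* backing storage ("the texture") */
    int width, height;     /* size of the current allocation */
    int allocations;       /* how many times storage was (re)created */
} CameraSurface;

/* Copy one decoded frame (tightly packed, 4 bytes per pixel) into the
 * surface, reallocating only if the frame size changed.
 * Returns 0 on success, -1 on allocation failure. */
int camera_surface_update(CameraSurface *s, const unsigned char *frame,
                          int width, int height)
{
    assert(s != NULL && frame != NULL && width > 0 && height > 0);
    size_t size = (size_t)width * (size_t)height * 4;

    if (s->pixels == NULL || s->width != width || s->height != height) {
        /* Equivalent of destroying and recreating the texture. */
        unsigned char *p = realloc(s->pixels, size);
        if (p == NULL)
            return -1;
        s->pixels = p;
        s->width = width;
        s->height = height;
        s->allocations++;
    }
    /* Equivalent of SDL_UpdateTexture: reuse the existing storage. */
    memcpy(s->pixels, frame, size);
    return 0;
}

/* Equivalent of SDL_DestroyTexture when the camera is closed. */
void camera_surface_destroy(CameraSurface *s)
{
    free(s->pixels);
    s->pixels = NULL;
    s->width = s->height = 0;
}
```

With a fixed camera resolution, a hundred frames cost one allocation and a hundred copies, instead of a hundred allocations, a hundred copies, and an extra render-to-target pass.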

I think I understand now. I will use C# to write a more complete demo over the weekend and post it here when it’s ready. If you are interested, you can take a look at my implementation logic and we can discuss it again.

It’s too bad I don’t check this site often. It looks like you understand what he means now, but I’ll say it a different way.

He’s suggesting you call SDL_RenderPresent at most once per frame. If your cameras update 25 or 30 times a second and the monitor is 60 Hz, you may want to draw all your cameras, call SDL_RenderPresent, then sleep until the next time you want to draw (1000/60 or 1000/30 ms). I don’t use sleep/SDL_Delay; I use vsync, then process all the events (usually keyboard input, but my code also responds to mouse movement).
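The sleep-based pacing described above can be sketched as a small helper that computes how long to wait before the next frame. `frame_delay_ms` is a hypothetical name; the only assumption is that timestamps are in milliseconds, as returned by SDL3's `SDL_GetTicks()`.

```c
#include <assert.h>
#include <stdint.h>

/* Milliseconds to wait before drawing the next frame, given when the
 * current frame started, the current time, and a target frame rate.
 * Returns 0 if the frame already took longer than its time slot.
 * Note: integer division truncates, so 1000/60 gives 16 ms (about 62.5 fps)
 * and 1000/30 gives 33 ms (about 30.3 fps). */
uint32_t frame_delay_ms(uint64_t frame_start_ms, uint64_t now_ms, int target_fps)
{
    assert(target_fps > 0 && now_ms >= frame_start_ms);
    uint64_t interval = 1000 / (uint64_t)target_fps;
    uint64_t elapsed = now_ms - frame_start_ms;
    return elapsed >= interval ? 0 : (uint32_t)(interval - elapsed);
}
```

In an SDL3 loop this would be used roughly as: record `start = SDL_GetTicks()`, draw every camera, call `SDL_RenderPresent(renderer)` once, then `SDL_Delay(frame_delay_ms(start, SDL_GetTicks(), 30))`. The vsync approach replaces this helper entirely: after `SDL_SetRenderVSync(renderer, 1)`, `SDL_RenderPresent` typically paces the loop to the monitor's refresh rate on its own.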

In short: don’t draw one camera and present it; draw all the cameras, then call SDL_RenderPresent.

Oh, also: I could be wrong, but calling SDL_RenderPresent multiple times doesn’t necessarily mean every call ends up on screen. I think I read that it tells SDL which frame to draw next, but if you draw another frame quickly, before the GPU is ready to display it, the new frame replaces it. At least that’s what I assumed when I read about double and triple buffering.

What you’re referring to is “mailbox” presentation mode. It only works for completed frames. Basically, if you have a frame being shown on screen, a second frame finished and waiting, and then a third frame is finished before the second frame is shown, the second frame gets dropped and the third frame is shown instead. It isn’t available on every operating system (macOS, for example, doesn’t offer it), and it’s something applications have to check for and specifically request. Otherwise, the frames get shown in the order they’re rendered, even with triple buffering.
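A toy simulation of the difference described above, assuming a simplified presentation engine that shows at most one new frame per display refresh. `Swapchain`, `submit_frame`, and `vblank` are hypothetical names, not SDL or Vulkan API; in Vulkan the two behaviours roughly correspond to `VK_PRESENT_MODE_FIFO_KHR` and `VK_PRESENT_MODE_MAILBOX_KHR`.

```c
#include <assert.h>

#define QUEUE_CAP 8

typedef enum { PRESENT_FIFO, PRESENT_MAILBOX } PresentMode;

/* Toy presentation queue. Frame ids are ints; 0 means "nothing yet". */
typedef struct {
    PresentMode mode;
    int shown;              /* frame currently on screen */
    int queue[QUEUE_CAP];   /* completed frames waiting for a refresh */
    int count;
    int dropped;            /* completed frames that were never shown */
} Swapchain;

/* A frame finished rendering and was handed over for presentation. */
void submit_frame(Swapchain *sc, int frame_id)
{
    if (sc->mode == PRESENT_MAILBOX) {
        /* Mailbox: one waiting slot; a newer frame replaces the old one. */
        if (sc->count == 1)
            sc->dropped++;
        sc->queue[0] = frame_id;
        sc->count = 1;
    } else {
        /* FIFO: frames wait in order (a real FIFO would block the
         * application when the queue is full). */
        assert(sc->count < QUEUE_CAP);
        sc->queue[sc->count++] = frame_id;
    }
}

/* The display refreshed: show the oldest waiting frame, if any. */
void vblank(Swapchain *sc)
{
    if (sc->count == 0)
        return;             /* nothing new: keep showing the old frame */
    sc->shown = sc->queue[0];
    for (int i = 1; i < sc->count; i++)
        sc->queue[i - 1] = sc->queue[i];
    sc->count--;
}
```

With frame 1 on screen and frames 2 and 3 both finishing before the next refresh, mailbox mode shows frame 3 and drops frame 2, while FIFO mode shows frame 2 and then frame 3 one refresh later.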

@Levo With vsync turned off, you can replace the previous frame before it is shown, but this can lead to tearing if the frame happens to be replaced while the screen is being updated.

Are you sure? Doesn’t double buffering solve that?

I wrote a test a few months ago to see how many frames per second SDL renders on my desktop compared to my other device. I started with too little work per frame and it was doing more than 2,000 frames per second. What’s happening there? I don’t think it was allocating enough memory to store several thousand frames of work.

It doesn’t store several thousand frames. You probably just had vsync turned off. So if one frame is in the process of being sent to the monitor when another frame is finished rendering, it starts sending the second frame instead of waiting for the first frame to finish being sent. It’s why turning off vsync can result in visible tearing.

Double buffering just means the rendering is done to an off screen buffer. You have the buffer that’s being shown, and then a second buffer that the rendering is done in, hence “double buffer”.
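A toy model of double buffering and of the tearing described above, assuming a four-row "screen" that the monitor scans out one row at a time and ignoring the blanking interval. All names are hypothetical. With vsync on, the buffer swap waits until the next refresh starts; with vsync off, it happens immediately, so one refresh can show the top of the old frame and the bottom of the new one.

```c
#include <assert.h>

#define SCREEN_ROWS 4

/* Each "buffer" just holds the id of the frame drawn into it. */
typedef struct {
    int buffers[2];
    int front;                 /* index of the buffer being scanned out */
    int pending_swap;          /* vsync on: swap deferred to next refresh */
    int vsync;
    int scanned[SCREEN_ROWS];  /* frame id the monitor showed on each row */
} DoubleBuffer;

/* Rendering always goes to the back buffer, never the one on screen. */
void draw_frame(DoubleBuffer *db, int frame_id)
{
    db->buffers[1 - db->front] = frame_id;
}

/* Equivalent of a present call. */
void present(DoubleBuffer *db)
{
    if (db->vsync)
        db->pending_swap = 1;       /* wait for the next refresh */
    else
        db->front = 1 - db->front;  /* swap now, even mid-scanout */
}

/* The monitor scans out one row; row 0 starts a new refresh. */
void scan_row(DoubleBuffer *db, int row)
{
    assert(row >= 0 && row < SCREEN_ROWS);
    if (row == 0 && db->pending_swap) {
        db->front = 1 - db->front;
        db->pending_swap = 0;
    }
    db->scanned[row] = db->buffers[db->front];
}
```

If `present` is called after rows 0 and 1 have been scanned out, the vsync-off buffer shows frame 1 on the top half and frame 2 on the bottom half (a tear), while the vsync-on buffer shows frame 1 for the whole refresh and switches to frame 2 at the start of the next one.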

@sjr

niuzhaosen/Avalonia-SDL3-FFmpegDemo: Avalonia+SDL3+FFmpegDemo

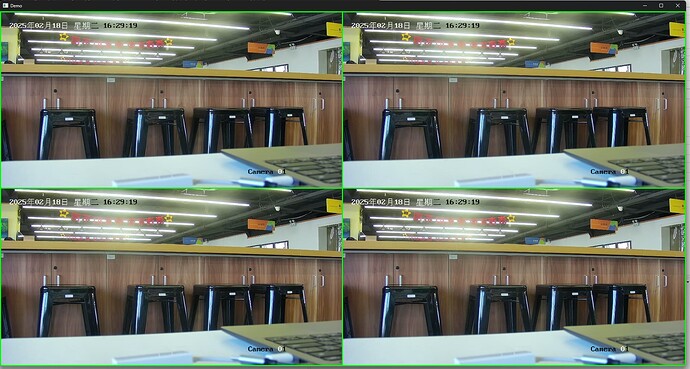

This is the demo I mentioned last time. Sorry for the delay; some things came up.

Do you think my understanding of the API usage and my code logic is correct?

Another issue: FFmpeg can decode with hardware acceleration, in which case the decoded frames live in video memory. To display them, I have to copy the data from video memory to system memory and then render it with SDL. This copy costs a lot of performance. Is there an SDL API that can render data directly from video memory?
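A back-of-the-envelope calculation of how much data that copy moves, assuming the hardware decoder outputs NV12 (a common output format for hardware decoders, but an assumption here) and ignoring row padding. In FFmpeg the readback itself is usually done with `av_hwframe_transfer_data`; the helpers below are hypothetical and only do the arithmetic.

```c
#include <assert.h>
#include <stdint.h>

/* Bytes in one NV12 frame: a full-resolution Y plane (width * height)
 * plus a half-resolution interleaved UV plane (width * height / 2). */
uint64_t nv12_frame_bytes(uint32_t width, uint32_t height)
{
    assert(width % 2 == 0 && height % 2 == 0);
    return (uint64_t)width * height * 3 / 2;
}

/* Bytes per second copied from video memory to system memory when every
 * decoded frame of every camera is read back. */
uint64_t readback_bytes_per_second(uint32_t width, uint32_t height,
                                   uint32_t fps, uint32_t cameras)
{
    return nv12_frame_bytes(width, height) * fps * cameras;
}
```

For 1080p at 30 fps that is 3,110,400 bytes per frame, about 93 MB/s per camera and about 1.5 GB/s for 16 cameras. Roughly the same amount then goes back to the GPU when the frames are uploaded with `SDL_UpdateNVTexture`, which is why the round trip is so expensive.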

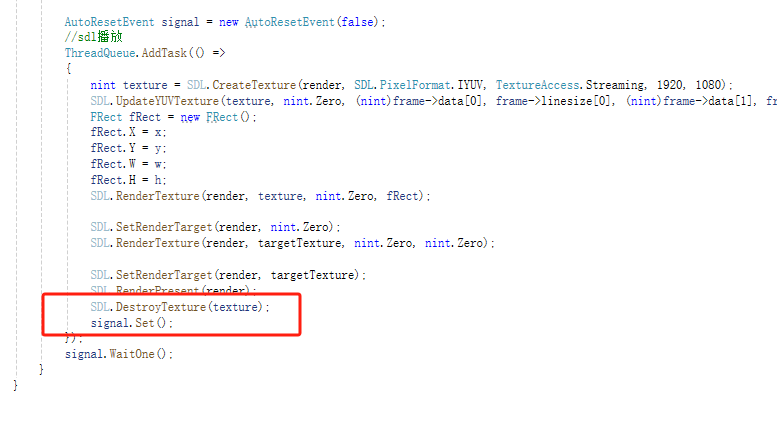

So, again, you should not be calling SDL_RenderPresent() for every camera. Draw everything, and only once all drawing is done and you’re ready to present it to the user, call SDL_RenderPresent(). You should only be calling it once per loop iteration!

SDL3 has its own camera API. If it does what you need (I don’t know if it can fetch video over a network), then it’s probably going to be easier to use that, since you won’t need FFmpeg.

There’s no zero-copy way to get video frames from FFmpeg to an SDL texture.

I don’t create an FFmpeg context for every frame; I create one for each camera.

Sorry, somehow I didn’t realize on my first glance through your code that you were creating a separate thread for every camera.

There actually is a way to get zero copy video frames to an SDL texture:

Oh, thank you. This is exactly what I was looking for!

Sadly, the existing C# binding for SDL3 doesn’t support connecting directly to the D3D11 library, and many of the structures haven’t been implemented.