Hi, I’m trying for the first time to write my own waveform (a simple square wave with some ramping) and send it to my audio device, but I’m running into some confounding problems at the very last stage of the process.

Context: I am on a little-endian system, using 16-bit unsigned samples, which is what my device seems to want. So the zero-amplitude value of each sample should be 65535 / 2 (32767 with integer division).
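To make the midpoint arithmetic concrete, here is a minimal sketch in plain C with no SDL calls. The helper names are made up for illustration, and it assumes the common offset-binary convention in which an unsigned 16-bit sample is the signed sample plus 32768 (0x8000).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed midpoint of the unsigned 16-bit range (offset-binary convention). */
#define U16_CENTER 32768

/* Map a signed sample (-32768..32767) to unsigned (0..65535). */
static uint16_t u16_from_s16(int16_t s)
{
    return (uint16_t)((int32_t)s + U16_CENTER);
}

/* Map an unsigned sample (0..65535) back to signed (-32768..32767). */
static int16_t s16_from_u16(uint16_t u)
{
    return (int16_t)((int32_t)u - U16_CENTER);
}

/* Hypothetical helper: fill n unsigned samples with the midpoint value. */
static void fill_silence_u16(uint16_t *buf, size_t n)
{
    assert(buf != NULL || n == 0);
    for (size_t i = 0; i < n; ++i) {
        buf[i] = (uint16_t)U16_CENTER;
    }
}
```

Under that convention, 65535 / 2 = 32767 sits one step below the midpoint, so s16_from_u16(32767) gives -1 rather than 0. That offset is tiny and probably not audible on its own.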

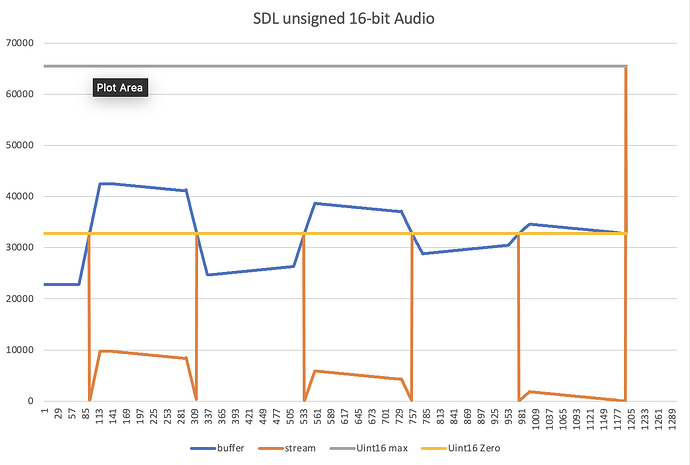

In my callback function, the source samples that I copy into the stream are fine; I’ve printed and graphed them to confirm this. However, something goes wrong when I write them to the stream or, depending on the method, after the copy to the stream but before my device plays them.

I’ve tried two methods of writing my samples to the stream: SDL_MixAudioFormat and a simple memcpy. The distortion is only visible in the stream waveform after calling SDL_MixAudioFormat; with memcpy the printed waveform looks fine, but the same distortion is still audible. I tested this by reducing the amplitude of the input signal to almost nothing, which had no effect on the volume of the output signal, even though the values in the stream still show a small waveform.
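The difference between the two write methods can be sketched in plain C. This is not SDL's implementation, only an illustration under stated assumptions: samples are unsigned 16-bit with 32768 as the midpoint, already in host byte order, and the function names are hypothetical. Copying overwrites the destination, while mixing adds the source to whatever the destination already holds, so the midpoint offset has to be removed before summing and restored afterwards.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Assumed midpoint of the unsigned 16-bit range. */
#define U16_CENTER 32768

/* Overwrite: the destination ends up identical to the source. */
static void copy_u16(uint16_t *dst, const uint16_t *src, size_t n)
{
    assert(dst != NULL && src != NULL);
    memcpy(dst, src, n * sizeof *dst);
}

/* Mix: sum both signals around the midpoint, then clamp to the valid range. */
static void mix_u16(uint16_t *dst, const uint16_t *src, size_t n)
{
    assert(dst != NULL && src != NULL);
    for (size_t i = 0; i < n; ++i) {
        int32_t a = (int32_t)dst[i] - U16_CENTER; /* signed deviation of dst */
        int32_t b = (int32_t)src[i] - U16_CENTER; /* signed deviation of src */
        int32_t sum = a + b;
        if (sum > 32767)  sum = 32767;
        if (sum < -32768) sum = -32768;
        dst[i] = (uint16_t)(sum + U16_CENTER);
    }
}
```

With this approach, mixing a source into a buffer that already holds the midpoint value leaves the source unchanged. A naive sum of the raw unsigned values would instead add the midpoint offset twice and overflow the 16-bit range.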

This is all quite hard to articulate, so the graph above illustrates it.

The blue line is a fragment of my input waveform, which looks exactly as I expect. The orange line is the waveform I get by printing unsigned 16-bit values directly from the stream after copying my waveform over with SDL_MixAudioFormat. I’ve confirmed that the format value I’m passing to that function specifies 16-bit, unsigned, little-endian samples.
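Reading samples back out of the byte stream can also be done without depending on the host's byte order. A minimal sketch with hypothetical helper names, assuming 16-bit little-endian samples as described above:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Read the 16-bit little-endian sample that starts at byte offset `off`. */
static uint16_t read_u16le(const uint8_t *bytes, size_t off)
{
    assert(bytes != NULL);
    return (uint16_t)(bytes[off] | ((uint16_t)bytes[off + 1] << 8));
}

/* Write a 16-bit sample at byte offset `off`, low byte first. */
static void write_u16le(uint8_t *bytes, size_t off, uint16_t value)
{
    assert(bytes != NULL);
    bytes[off]     = (uint8_t)(value & 0xFF);
    bytes[off + 1] = (uint8_t)(value >> 8);
}
```

On a little-endian host this produces the same values as a memcpy into a 16-bit variable, so it mainly serves as a cross-check that the printed numbers really are little-endian samples.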

Here’s my whole callback function, where you can see exactly how I’m printing the values that generate these waveforms:

    int callback_i = 0; // for graphing

    void audio_callback(void* userdata, Uint8* stream, int streamLength)
    {
        AudioData* audio = (AudioData*)userdata;

        if (audio->length == 0) {
            return;
        }

        /* Never read more than what is left in the source buffer. */
        Uint32 length = (Uint32) streamLength;
        length = (length > audio->length ? audio->length : length);

        /* Pre-fill the whole stream with the zero-amplitude value. */
        Uint16 zero_amp = 65535 / 2;
        for (int i=0; i<streamLength-1; i+=2) {
            memcpy(&(stream[i]), &zero_amp, 2);
        }

        printf("\nFormat Details => isSigned: %d, bitSize: %d, isBigEndian: %d", (Uint8) SDL_AUDIO_ISSIGNED(audio->format), (Uint8) SDL_AUDIO_BITSIZE(audio->format), (Uint8) SDL_AUDIO_ISBIGENDIAN(audio->format));

        /* Method 1: memcpy. Printed waveform from stream looks fine, but the audio still sounds clearly incorrect. */
        /* memcpy(stream, audio->pos, length); */

        /* Method 2: SDL_MixAudioFormat. Problems are visible in the printed waveform. */
        SDL_MixAudioFormat(stream, audio->pos, audio->format, length, 128);

        /* Graphing data: dump the source samples and the stream samples as text. */
        if (callback_i < 2000) {
            FILE* f1;
            FILE* f2;
            f2 = fopen("callback_origin.txt", "w");
            f1 = fopen("callback_waveform.txt", "w");

            for (int i=0; i<length; i+=2) {
                Uint16 sample_origin = 0;
                Uint16 sample = 0;
                memcpy(&sample, &(stream[i]), 2);
                memcpy(&sample_origin, &((audio->pos)[i]), 2);
                fprintf(f1, "%d\n", sample);
                fprintf(f2, "%d\n", sample_origin);
            }

            /* Close the files so the data is flushed and the handles are not leaked. */
            fclose(f1);
            fclose(f2);

            callback_i += 1;
        }

        audio->pos += length;
        audio->length -= length;
    }

What am I misunderstanding or doing wrong? Any help would be tremendously appreciated!

EDIT:

Passing AUDIO_U16LSB explicitly to SDL_MixAudioFormat actually fixes the appearance of the orange waveform. However, the audio I’m hearing is very loud (and also, I think, distorted in a few other ways) regardless of how small I make the input signal amplitude. This is the same problem I have with memcpy. I had thought earlier on that this was due to some automatic normalization that SDL does behind the scenes, but it seems more likely that the waveform is getting distorted based on some format misunderstanding or disagreement somewhere.
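One way a format disagreement could produce this symptom is sketched below in plain C with hypothetical helpers. If whatever consumes the stream treated the bytes as signed 16-bit while the callback writes unsigned 16-bit, a quiet signal hovering around the unsigned midpoint would be read as values near the signed extremes. This is an assumption about what might be happening, not a confirmed diagnosis.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Reinterpret the bit pattern of an unsigned 16-bit sample as signed 16-bit,
   which is what a signed consumer would effectively see for the same bytes. */
static int16_t reinterpret_u16_as_s16(uint16_t u)
{
    int16_t s;
    assert(sizeof s == sizeof u);
    memcpy(&s, &u, sizeof s); /* same two bytes, different interpretation */
    return s;
}

/* Peak-to-peak swing of two unsigned samples as seen by a signed consumer. */
static int32_t swing_as_s16(uint16_t low, uint16_t high)
{
    int32_t a = reinterpret_u16_as_s16(low);
    int32_t b = reinterpret_u16_as_s16(high);
    return a > b ? a - b : b - a;
}
```

For example, a tiny square wave alternating between 32760 and 32775 (a swing of 15 in unsigned terms) becomes 32760 and -32761 when read as signed, which is nearly full scale no matter how small the intended amplitude was. Comparing the format the device was actually opened with against the one the callback assumes would confirm or rule this out.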