#include "Shader.h"

void Shader::init(SDL_Renderer* renderer) {

glCreateShader = (PFNGLCREATESHADERPROC)SDL_GL_GetProcAddress("glCreateShader");

glShaderSource = (PFNGLSHADERSOURCEPROC)SDL_GL_GetProcAddress("glShaderSource");

glCompileShader = (PFNGLCOMPILESHADERPROC)SDL_GL_GetProcAddress("glCompileShader");

glGetShaderiv = (PFNGLGETSHADERIVPROC)SDL_GL_GetProcAddress("glGetShaderiv");

glGetShaderInfoLog = (PFNGLGETSHADERINFOLOGPROC)SDL_GL_GetProcAddress("glGetShaderInfoLog");

glDeleteShader = (PFNGLDELETESHADERPROC)SDL_GL_GetProcAddress("glDeleteShader");

glAttachShader = (PFNGLATTACHSHADERPROC)SDL_GL_GetProcAddress("glAttachShader");

glCreateProgram = (PFNGLCREATEPROGRAMPROC)SDL_GL_GetProcAddress("glCreateProgram");

glLinkProgram = (PFNGLLINKPROGRAMPROC)SDL_GL_GetProcAddress("glLinkProgram");

glValidateProgram = (PFNGLVALIDATEPROGRAMPROC)SDL_GL_GetProcAddress("glValidateProgram");

glGetProgramiv = (PFNGLGETPROGRAMIVPROC)SDL_GL_GetProcAddress("glGetProgramiv");

glGetProgramInfoLog = (PFNGLGETPROGRAMINFOLOGPROC)SDL_GL_GetProcAddress("glGetProgramInfoLog");

glUseProgram = (PFNGLUSEPROGRAMPROC)SDL_GL_GetProcAddress("glUseProgram");

glUniform1i = (PFNGLUNIFORM1IPROC)SDL_GL_GetProcAddress("glUniform1i");

glUniform1f = (PFNGLUNIFORM1FPROC)SDL_GL_GetProcAddress("glUniform1f");

glGetUniformLocation = (PFNGLGETUNIFORMLOCATIONPROC)SDL_GL_GetProcAddress("glGetUniformLocation");

glActiveTexture = (PFNGLACTIVETEXTUREPROC)SDL_GL_GetProcAddress("glActiveTexture");

glTexStorage3D = (PFNGLTEXSTORAGE3DPROC)SDL_GL_GetProcAddress("glTexStorage3D");

lut = IMG_LoadTexture(renderer, "data/shader/image/lut.png");

light = IMG_LoadTexture(renderer, "data/shader/image/light.png");

border = IMG_LoadTexture(renderer, "data/shader/image/border.png");

sunlight = IMG_LoadTexture(renderer, "data/shader/image/sunlight.png");

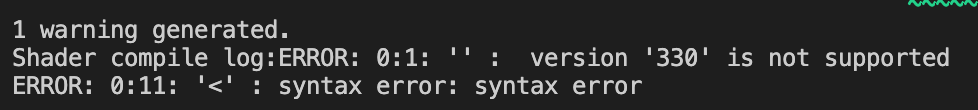

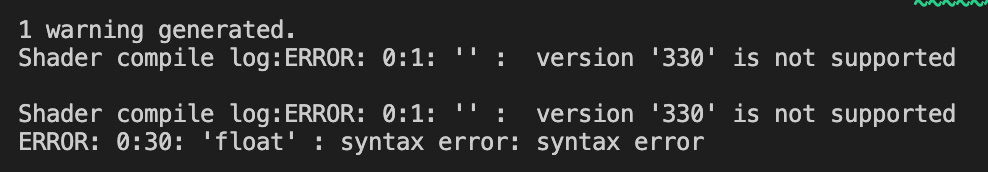

loadShader("data/shader/shader.vs", "data/shader/1.fs");

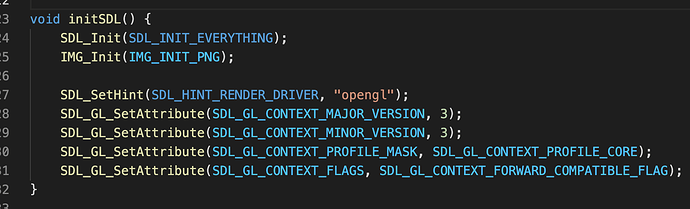

SDL_GL_SetAttribute(SDL_GL_CONTEXT_MAJOR_VERSION, 3);

SDL_GL_SetAttribute(SDL_GL_CONTEXT_MINOR_VERSION, 3);

SDL_GL_SetAttribute(SDL_GL_CONTEXT_PROFILE_MASK, SDL_GL_CONTEXT_PROFILE_CORE);

SDL_GL_SetAttribute(SDL_GL_CONTEXT_FLAGS, SDL_GL_CONTEXT_FORWARD_COMPATIBLE_FLAG);

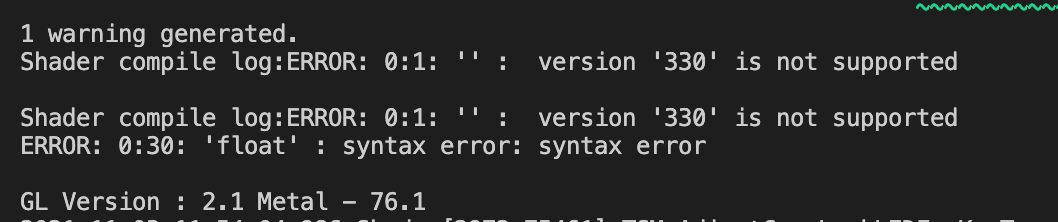

std::cout << "GL Version : " << (const char*)glGetString(GL_VERSION) << std::endl;

shaderBuffer = SDL_CreateTexture(renderer, SDL_PIXELFORMAT_RGBA8888, SDL_TEXTUREACCESS_TARGET, 1920, 1080);

}

void Shader::loadShader(std::string vtxFile, std::string fragFile) {

GLuint vtxShaderId, fragShaderId;

programId = glCreateProgram();

std::ifstream f(vtxFile);

std::string source((std::istreambuf_iterator<char>(f)),

std::istreambuf_iterator<char>());

vtxShaderId = compileShader(source.c_str(), GL_VERTEX_SHADER);

f = std::ifstream(fragFile);

source = std::string((std::istreambuf_iterator<char>(f)),

std::istreambuf_iterator<char>());

fragShaderId = compileShader(source.c_str(), GL_FRAGMENT_SHADER);

if (vtxShaderId && fragShaderId) {

// Associate shader with program

glAttachShader(programId, vtxShaderId);

glAttachShader(programId, fragShaderId);

glLinkProgram(programId);

glValidateProgram(programId);

// Check the status of the compile/link

GLint logLen;

glGetProgramiv(programId, GL_INFO_LOG_LENGTH, &logLen);

if (logLen > 0) {

char* log = (char*)malloc(logLen * sizeof(char));

// Show any errors as appropriate

glGetProgramInfoLog(programId, logLen, &logLen, log);

std::cout << "Prog Info Log: " << std::endl << log << std::endl;

free(log);

}

}

if (vtxShaderId) {

glDeleteShader(vtxShaderId);

}

if (fragShaderId) {

glDeleteShader(fragShaderId);

}

}

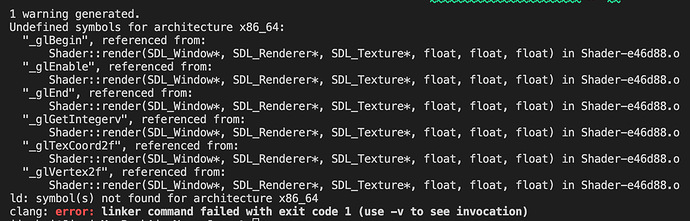

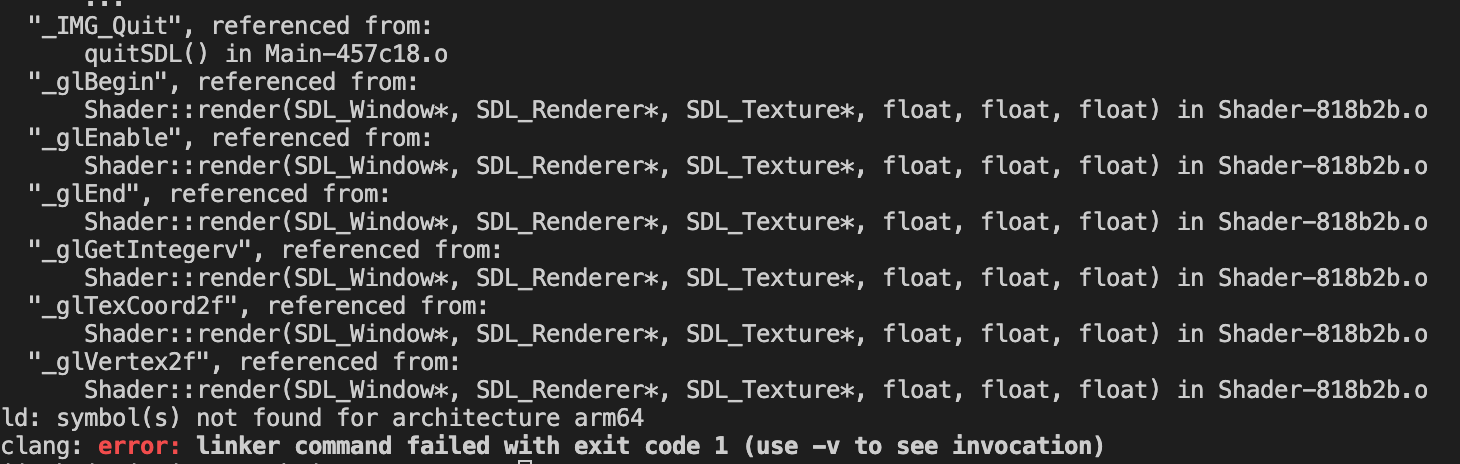

void Shader::render(SDL_Window* window, SDL_Renderer* renderer, SDL_Texture* buffer, float cameraY, float greyScaleAmount, float transition) {

GLint oldProgramId = 0; // initialized so it is never read uninitialized

SDL_SetRenderTarget(renderer, shaderBuffer);

//SDL_SetRenderTarget(renderer, NULL);

if (programId != 0) {

glGetIntegerv(GL_CURRENT_PROGRAM, &oldProgramId);

glUseProgram(programId);

}

glEnable(GL_TEXTURE_2D);

glActiveTexture(GL_TEXTURE0);

SDL_GL_BindTexture(buffer, NULL, NULL);

glUniform1i(glGetUniformLocation(programId, "texture0"), 0);

glActiveTexture(GL_TEXTURE1);

SDL_GL_BindTexture(lut, NULL, NULL);

glUniform1i(glGetUniformLocation(programId, "lut"), 1);

glActiveTexture(GL_TEXTURE2);

SDL_GL_BindTexture(light, NULL, NULL);

glUniform1i(glGetUniformLocation(programId, "light"), 2);

glActiveTexture(GL_TEXTURE3);

SDL_GL_BindTexture(border, NULL, NULL);

glUniform1i(glGetUniformLocation(programId, "border"), 3);

glActiveTexture(GL_TEXTURE4);

SDL_GL_BindTexture(sunlight, NULL, NULL);

glUniform1i(glGetUniformLocation(programId, "sunlight"), 4);

glUniform1f(glGetUniformLocation(programId, "cameraY"), cameraY);

glUniform1f(glGetUniformLocation(programId, "greyScaleAmount"), greyScaleAmount);

glUniform1f(glGetUniformLocation(programId, "progress"), transition);

GLfloat minx, miny, maxx, maxy;

GLfloat minu, maxu, minv, maxv;

// Window coordinates where to draw.

minx = 0.0f;

miny = 0.0f;

maxx = 1920;

maxy = 1080;

minu = 0.0f;

maxu = 1.0f;

minv = 0.0f;

maxv = 1.0f;

glBegin(GL_TRIANGLE_STRIP);

glTexCoord2f(minu, minv);

glVertex2f(minx, miny);

glTexCoord2f(maxu, minv);

glVertex2f(maxx, miny);

glTexCoord2f(minu, maxv);

glVertex2f(minx, maxy);

glTexCoord2f(maxu, maxv);

glVertex2f(maxx, maxy);

glEnd();

SDL_GL_SwapWindow(window);

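// Unbind every texture that was bound above, not only the first two.

SDL_GL_UnbindTexture(buffer);

SDL_GL_UnbindTexture(lut);

SDL_GL_UnbindTexture(light);

SDL_GL_UnbindTexture(border);

SDL_GL_UnbindTexture(sunlight);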

if (programId != 0) {

glUseProgram(oldProgramId);

}

SDL_SetRenderTarget(renderer, NULL);

drect = { 0, 0, 1920, 1080 };

SDL_RenderCopy(renderer, shaderBuffer, NULL, &drect);

}

GLuint Shader::compileShader(const char* source, GLuint shaderType) {

// Create ID for shader

GLuint result = glCreateShader(shaderType);

// Define shader text

glShaderSource(result, 1, &source, NULL);

// Compile shader

glCompileShader(result);

// Check the shader (vertex or fragment) for compile errors

GLint shaderCompiled = GL_FALSE;

glGetShaderiv(result, GL_COMPILE_STATUS, &shaderCompiled);

if (shaderCompiled != GL_TRUE) {

GLint logLength;

glGetShaderiv(result, GL_INFO_LOG_LENGTH, &logLength);

if (logLength > 0)

{

GLchar* log = (GLchar*)malloc(logLength);

glGetShaderInfoLog(result, logLength, &logLength, log);

std::cout << "Shader compile log:" << log << std::endl;

free(log);

}

glDeleteShader(result);

result = 0;

}

return result;

}

I know it's a bit rude to post the whole code here, but I don't think I have any other choice.

If you have some time, could you check my code? I have those SDL_GL_SetAttribute calls in the main code.
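One gap in loadShader above is that it never checks whether the std::ifstream actually opened, so a wrong path silently produces an empty source string that is then handed to compileShader. A minimal sketch of a checked read might look like the following. The helper name readTextFile is hypothetical and not part of the code above, and this is only one possible way to surface the error.

```cpp
#include <fstream>
#include <iostream>
#include <iterator>
#include <string>

// Hypothetical helper (not in the original code): reads a whole text file
// into `out`. Returns false and leaves `out` untouched if the file cannot
// be opened, so the caller can stop before compiling an empty shader.
bool readTextFile(const std::string& path, std::string& out) {
    std::ifstream file(path, std::ios::in | std::ios::binary);
    if (!file.is_open()) {
        std::cerr << "Could not open file: " << path << std::endl;
        return false;
    }
    // Same iterator-based read as in loadShader, but only after the check.
    out.assign((std::istreambuf_iterator<char>(file)),
               std::istreambuf_iterator<char>());
    return true;
}
```

In loadShader, a helper like this could replace the two std::ifstream reads, with the function returning early when either read fails instead of compiling an empty string.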