Instead of starting a new thread, I want to add that this highlights a weird problem imo

Context: I am making my own 2D game engine and using that engine to make an editor for the engine's data (that makes sense, right?). My engine supports scaling objects, and this is where float precision in the src rect is critical.

TL;DR: I solved my element scaling rendering issue by changing the src rect to SDL_FRect and doing minor refactors inside the SDL functions, and I highly recommend allowing this.

I have a core component called a WindowElement that can position and size itself either by anchors or positions.

Part of this hierarchy is a feature that allows me to mark a window element as a mask. This is super useful for making a sub-window that can scroll for example.

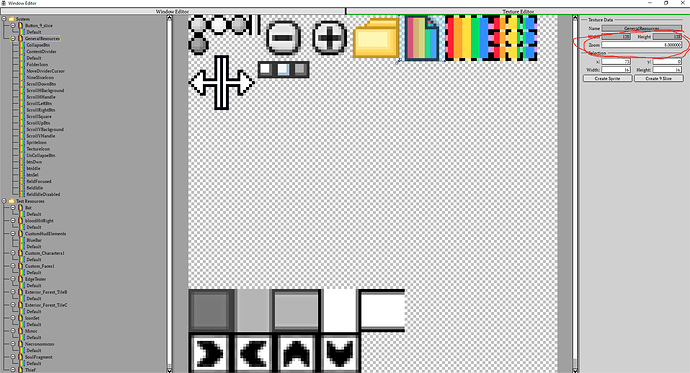

The issue came up in my texture editor, whose goal is to let me specify which region of a texture to use for each sprite. For example:

<Sprite Name="Default" xPos="0" yPos="0" width="128" height="128"/>

<Sprite Name="MoveDividerCursor" xPos="0" yPos="7" width="23" height="19"/>

The Default sprite is created for every texture and represents using the full texture.

Now here's where stuff gets funky XD

Selecting sprite rects with pixel accuracy is not practical without zooming, so I added zooming. That means my engine supports the idea of scaling an image by a percentage.

My RenderableWindowElement subclass automatically calculates the src and dst rects, and in this case applies simple logic when an element is scaled to grab the correct src rect. However, when scrolling, I would be moving either the dst rect or the src rect by sub-pixel amounts (which is intended behavior).

Imagine within my masked area, with a texture being zoomed 8x, my scroll is 2 pixels from the top left.

In reality I am stretching the src texture over a bigger destination area. Applying floats to the src rect (one of the advantages of UVs being floats, for example) straightforwardly tells the renderer: hey, only 6 pixels of this stretch are visible, instead of forcing that full 8 pixel conversion.
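To put numbers on that, here is a minimal sketch of the mapping, not my actual engine code. `FRectSketch` is a hypothetical stand-in for SDL_FRect so it compiles without SDL, and `visible_src` and its parameters are names made up for illustration.

```c
#include <assert.h>

/* Hypothetical stand-in for SDL_FRect so the sketch compiles without SDL. */
typedef struct { float x, y, w, h; } FRectSketch;

/* Map the visible part of a zoomed texture back into texture space.
   scroll_* and view_* are in destination pixels, zoom is the scale factor. */
static FRectSketch visible_src(float scroll_x, float scroll_y,
                               float view_w, float view_h, float zoom)
{
    assert(zoom > 0.0f);
    FRectSketch src;
    src.x = scroll_x / zoom;  /* 2 px of scroll at 8x is 0.25 of a texel */
    src.y = scroll_y / zoom;
    src.w = view_w / zoom;
    src.h = view_h / zoom;
    return src;
}
```

With a 2 pixel scroll at 8x zoom the src origin lands at 0.25 of a texel, so 6 of that texel's 8 destination pixels are still showing. An integer src rect would truncate the 0.25 to 0, and the image could only move in whole 8 pixel steps.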

Anyhoo, the change was relatively easy to do and the results were super helpful. Honestly, if you look at the SDL_RenderCopyF implementation:

In the case of not using render geometry (surface blitting), it's as simple as injecting the float values converted to int.

In the geometry case, the src rect is actually lost past this function, as it's used to generate the UVs and XYs, which are floats. Passing a float src rect actually removes the need to cast the ints of the src rect to float, and the renderers pick it up automatically.

Disclaimer: My solution only works when rendering using geometry. SDL_Surface to SDL_Surface rendering won't benefit, but I honestly have had no issue with that so far.
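Roughly, the geometry path boils down to something like the sketch below. This is my simplified illustration, not the actual SDL source: `FRectSketch`, `UVSketch` and `src_to_uv` are hypothetical names, and the assumption is simply that UVs are the src rect divided by the texture size.

```c
#include <assert.h>

/* Hypothetical stand-ins, not SDL types. */
typedef struct { float x, y, w, h; } FRectSketch;
typedef struct { float u0, v0, u1, v1; } UVSketch;

/* Turn a float src rect (in texels) into normalized UVs.
   Assumption: UV = texel coordinate / texture size. */
static UVSketch src_to_uv(FRectSketch src, int tex_w, int tex_h)
{
    assert(tex_w > 0 && tex_h > 0);
    UVSketch uv;
    uv.u0 = src.x / (float)tex_w;
    uv.v0 = src.y / (float)tex_h;
    uv.u1 = (src.x + src.w) / (float)tex_w;
    uv.v1 = (src.y + src.h) / (float)tex_h;
    return uv;
}
```

Since the result is float either way, a fractional src origin like 0.25 just flows straight into the UVs with no extra work for the backend.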