I encountered a rendering bug when using SDL_RenderCopy to render part of a texture.

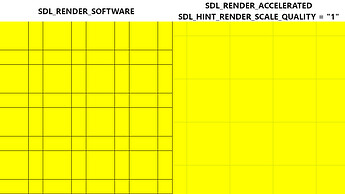

When the logical size set with SDL_RenderSetLogicalSize differs from the actual size of the displayed area, and either SDL_RENDERER_SOFTWARE is used or SDL_RENDERER_ACCELERATED is used with SDL_HINT_RENDER_SCALE_QUALITY = "1" or better, the rendered part shows lines made of the pixels that neighbor it in the texture.
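For reference, here is the arithmetic behind the size mismatch, as a small sketch rather than anything taken from SDL itself. It assumes the logical area is stretched uniformly over the window, which holds when both have the same aspect ratio (as with the 768x768 logical size and 512x512 window in the example below). `logical_to_output` is a hypothetical helper, not an SDL function.

```c
#include <assert.h>

/* Output-pixel position of a logical coordinate when a logical axis of
 * `logical_size` units is stretched over `output_size` real pixels.
 * Hypothetical helper for illustration; not part of the SDL API. */
static double logical_to_output(int coord, int logical_size, int output_size)
{
    assert(logical_size > 0);
    return (double)coord * output_size / logical_size;
}
```

With those sizes, the edge of a 64-unit sprite lands at output pixel 42.67, so sprite borders fall between real pixels, while a coordinate of 192 lands exactly on 128. I assume, but have not verified, that these fractional edges are where the filtered scaling picks up the neighboring pixels.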

The issue doesn’t appear with SDL_RENDERER_ACCELERATED and SDL_HINT_RENDER_SCALE_QUALITY = "0", but that setting produces “ugly” output when rendering complicated graphics (e.g. vertical lines).

I currently have 3 workarounds for this:

- Use a buffer texture: copy the desired part of the texture into it first, then render the buffer.

- Move each desired part of the texture into its own separate texture.

- Separate the texture parts in the sheet with 1px transparent lines.
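The third workaround mostly comes down to how the source rectangles are computed. A minimal sketch, assuming square cells and a transparent gutter both between cells and around the sheet border. `CellRect`, `padded_cell` and `padded_sheet_extent` are hypothetical names, and `CellRect` is a plain stand-in for `SDL_Rect` so the snippet compiles without SDL:

```c
#include <assert.h>

/* Plain stand-in for SDL_Rect so the sketch compiles without SDL. */
typedef struct { int x, y, w, h; } CellRect;

/* Source rectangle of the sprite at (col, row) in a sheet whose cells are
 * separated from each other, and from the sheet border, by `gutter`
 * transparent pixels. */
static CellRect padded_cell(int col, int row, int cell_size, int gutter)
{
    CellRect r;
    assert(col >= 0 && row >= 0 && cell_size > 0 && gutter >= 0);
    r.x = gutter + col * (cell_size + gutter);
    r.y = gutter + row * (cell_size + gutter);
    r.w = cell_size;
    r.h = cell_size;
    return r;
}

/* Sheet width (or height) in pixels needed for `count` cells along one axis. */
static int padded_sheet_extent(int count, int cell_size, int gutter)
{
    assert(count > 0 && cell_size > 0 && gutter >= 0);
    return gutter + count * (cell_size + gutter);
}
```

With 64px cells and a 1px gutter, the sprite in column 2, row 1 starts at (131, 66), and a sheet 4 cells wide needs 261 pixels. The fields would then be copied into the `SDL_Rect` passed as `srcrect` to SDL_RenderCopy.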

My use case is sprites, but I don’t really like any of these three approaches. I’m interested to hear what other people think.

Minimal example:

    #include <SDL.h>
    #include <assert.h>

    /* Parenthesized so the macro expands safely inside larger expressions. */
    #define SUBSYSTEMS (SDL_INIT_VIDEO | SDL_INIT_EVENTS | SDL_INIT_TIMER)
    #define WINDOW_FLAGS 0

    /* Define RENDERER_SOFTWARE at compile time to test the software renderer. */
    #ifdef RENDERER_SOFTWARE
    # define RENDERER_FLAGS SDL_RENDERER_SOFTWARE
    #else
    # define RENDERER_FLAGS SDL_RENDERER_ACCELERATED
    # define RENDER_SCALE_QUALITY "1"
    #endif

    /* The window is smaller than the logical size, so output is scaled down. */
    #define WINDOW_W 512
    #define WINDOW_H 512
    #define RENDER_W 768
    #define RENDER_H 768

    #define OBJECT_SIZE 64
    #define COLS (RENDER_W / OBJECT_SIZE)
    #define ROWS (RENDER_H / OBJECT_SIZE)

    int main(int argc, char **argv) {
        (void)argc;
        (void)argv;

        int rc;
        (void)rc; /* Avoids an unused-variable warning when asserts are disabled. */

        rc = SDL_InitSubSystem(SUBSYSTEMS);
        assert(rc == 0);

    #ifndef RENDERER_SOFTWARE
        /* Must be set before the texture is created. */
        SDL_SetHint(SDL_HINT_RENDER_SCALE_QUALITY, RENDER_SCALE_QUALITY);
    #endif

        SDL_Window *window = SDL_CreateWindow("test", SDL_WINDOWPOS_CENTERED, SDL_WINDOWPOS_CENTERED, WINDOW_W, WINDOW_H, WINDOW_FLAGS);
        assert(window != NULL);

        SDL_Renderer *renderer = SDL_CreateRenderer(window, -1, RENDERER_FLAGS);
        assert(renderer != NULL);

        rc = SDL_RenderSetLogicalSize(renderer, RENDER_W, RENDER_H);
        assert(rc == 0);

        SDL_Surface *spritesheet_surface = SDL_LoadBMP("spritesheet.bmp");
        assert(spritesheet_surface != NULL);

        SDL_Texture *spritesheet = SDL_CreateTextureFromSurface(renderer, spritesheet_surface);
        assert(spritesheet != NULL);
        SDL_FreeSurface(spritesheet_surface);

        /* Source: only the top-left OBJECT_SIZE x OBJECT_SIZE part of the sheet. */
        SDL_Rect src;
        SDL_Rect dst;
        src.x = 0;
        src.y = 0;
        src.w = OBJECT_SIZE;
        src.h = OBJECT_SIZE;
        dst.w = OBJECT_SIZE;
        dst.h = OBJECT_SIZE;

        /* Tile that part over the whole logical area. */
        for (int x = 0; x < COLS; x++) {
            for (int y = 0; y < ROWS; y++) {
                dst.x = x * OBJECT_SIZE;
                dst.y = y * OBJECT_SIZE;
                rc = SDL_RenderCopy(renderer, spritesheet, &src, &dst);
                assert(rc == 0);
            }
        }

        SDL_RenderPresent(renderer);

        /* Keep the window open until it is closed. */
        SDL_Event event;
        int quit = 0;
        do {
            while (SDL_PollEvent(&event)) {
                if (event.type == SDL_QUIT) {
                    quit = 1;
                }
            }
            SDL_Delay(200); // Give the CPU a break.
        } while (!quit);

        SDL_DestroyTexture(spritesheet);
        SDL_DestroyRenderer(renderer);
        SDL_DestroyWindow(window);
        SDL_QuitSubSystem(SUBSYSTEMS);
        SDL_Quit();

        return 0;
    }

I can’t upload the spritesheet to the forum directly as a new user, so here it is, zstd-compressed and base64-encoded:

KLUv/QSIBQIAggMJEeAtBwBQEOmScrB/2VR/7Z0Cd8OETPnf95Fx+0Wwrs7+xk4JCACF3uDagQYo

sNNRmAEEOw6IAcwCV5XdMEOuaYQ=

Screenshot of both cases: