Hello all!

I recently updated from SDL 2.0.18 + SDL_ttf 2.0.15 to SDL 2.0.20 + SDL_ttf 2.0.18. I have code that builds a glyph map using TTF_RenderText_Blended for a monospaced font, then converts the surface to an OpenGL texture.

Glyph texture building code:

    const std::string characters = " ! #$%&'()*+,-./0123456789:;<=>?@ABCDEFGHIJKLMNOPQRSTUVWXYZ _ abcdefghijklmnopqrstuvwxyz | ";

    inline void initialize_fixed_function_text() {
        // Build Glyph texture
        SDL_Surface * LUTSurface = TTF_RenderText_Blended(Fonts::Get().GetFont(),
                                                          characters.c_str(),
                                                          {255, 255, 255, 255});
        surface_width = LUTSurface->w;
        surface_height = LUTSurface->h;
        make_texture(fast_text_texture, LUTSurface->pixels, LUTSurface->w, LUTSurface->h);

        TTF_SizeText(Fonts::Get().GetFont(), "A", &character_width, &character_height);
        character_width_percent = float(character_width) / float(LUTSurface->w);
    }

make_texture():

    void make_texture(Texture &texture, void *data, unsigned long width, unsigned long height) override {
        glGenTextures(1, &texture.gl_texture);
        glBindTexture(GL_TEXTURE_2D, texture.gl_texture);

        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_REPEAT);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_REPEAT);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

        glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, width, height, 0, GL_BGRA, GL_UNSIGNED_BYTE, data);
        glGenerateMipmap(GL_TEXTURE_2D);
    }

I then build rects for each character dynamically by setting the vertex texture coordinate to a point in the glyph map.
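For reference, the lookup described above can be sketched roughly as follows. This is a minimal illustration, not the actual code: `GlyphUV` and `glyph_uv` are hypothetical names, and it assumes that every character in the atlas string occupies exactly one fixed-width cell, in string order, in a single row.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Horizontal texture-coordinate span of one glyph in a single-row atlas.
struct GlyphUV {
    float u0; // left edge, in the range 0..1
    float u1; // right edge, in the range 0..1
};

// Hypothetical helper: finds `c` in the atlas string and returns its span.
// `glyph_width_percent` is glyph width in pixels / atlas width in pixels.
// Characters missing from the atlas fall back to cell 0.
inline GlyphUV glyph_uv(const std::string &atlas_chars, char c, float glyph_width_percent) {
    assert(!atlas_chars.empty());
    std::size_t index = atlas_chars.find(c);
    if (index == std::string::npos) {
        index = 0;
    }
    const float u0 = static_cast<float>(index) * glyph_width_percent;
    return {u0, u0 + glyph_width_percent};
}
```

With the names from the post, a call would look like `glyph_uv(characters, 'A', character_width_percent)`, and the two returned values become the left and right texture coordinates of that character's rect.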

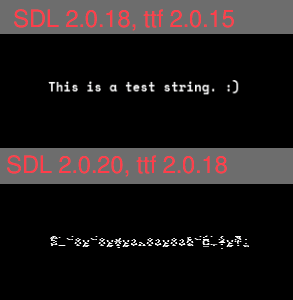

The image above shows the difference between the two versions.

However, if I save the SDL_Surface to disk and reload it, it works correctly again:

    SDL_SaveBMP(LUTSurface, "/Users/joe/Library/Preferences/MiniMeters/glyphs.bmp");
    LUTSurface = SDL_LoadBMP("/Users/joe/Library/Preferences/MiniMeters/glyphs.bmp");

My assumption is that the surface format changed, but I could not find anything about this in the changelog or commits.
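One related thing that may be worth ruling out alongside the pixel format is the row layout. An SDL_Surface carries a `pitch` (bytes per row) that is not guaranteed to equal `w * 4`, while the `glTexImage2D` call in make_texture() reads the rows as tightly packed. Whether this is what changed between the two versions is not confirmed here. The sketch below only copies the rows into a tightly packed buffer so the two cases can be compared; `pack_rows` is a hypothetical helper, not part of SDL.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Copies `height` rows of `width * bytes_per_pixel` bytes each out of a buffer
// whose rows start `pitch` bytes apart, dropping any padding at the end of a row.
inline std::vector<std::uint8_t> pack_rows(const void *pixels, int width, int height,
                                           int pitch, int bytes_per_pixel) {
    const std::size_t row_bytes =
        static_cast<std::size_t>(width) * static_cast<std::size_t>(bytes_per_pixel);
    // A row can never be shorter than the pixels it holds.
    assert(pitch >= 0 && static_cast<std::size_t>(pitch) >= row_bytes);

    std::vector<std::uint8_t> packed(row_bytes * static_cast<std::size_t>(height));
    const auto *src = static_cast<const std::uint8_t *>(pixels);
    for (int y = 0; y < height; ++y) {
        const std::size_t row = static_cast<std::size_t>(y);
        std::memcpy(packed.data() + row * row_bytes,
                    src + row * static_cast<std::size_t>(pitch),
                    row_bytes);
    }
    return packed;
}
```

Assuming a 32-bit surface, the result of `pack_rows(LUTSurface->pixels, LUTSurface->w, LUTSurface->h, LUTSurface->pitch, 4)` could be passed to make_texture() via `.data()` in place of `LUTSurface->pixels`. If the text then renders correctly, the row pitch rather than the pixel format would be the likely difference.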

Any help would be appreciated! Thank you!