I noticed that the surface returned by SDL_LoadBMP is in BGR format, but OpenGL ES only supports RGB formats. The two don’t fit together.

Is there a solution on the SDL side, or do you have to manually swap all the bytes in the data buffer?
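For reference, the manual swap asked about here could look roughly like the following. This is only a sketch for tightly defined 24-bit pixels; the function name `swap_bgr_rgb24` is made up for illustration, and it assumes the caller knows the row pitch (bytes per row, which can be larger than width * 3).

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: swap the first and third byte of every 3-byte
   pixel in place, turning BGR into RGB (or back again).
   `pitch` is the number of bytes per row; padding bytes at the end of
   a row are left untouched. */
void swap_bgr_rgb24(uint8_t *pixels, int width, int height, size_t pitch)
{
    for (int y = 0; y < height; ++y) {
        uint8_t *row = pixels + (size_t)y * pitch;
        for (int x = 0; x < width; ++x) {
            uint8_t *p = row + (size_t)x * 3;
            uint8_t tmp = p[0];
            p[0] = p[2];
            p[2] = tmp;
        }
    }
}
```

With an SDL_Surface the arguments would presumably be `surf->pixels`, `surf->w`, `surf->h` and `surf->pitch`.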

SDL_stbimage.h (needs stb_image.h as only dependency, besides SDL of course) outputs SDL_PIXELFORMAT_RGB24 or SDL_PIXELFORMAT_RGBA32 (depending on whether there’s an alpha channel).

Though if you feed the data into OpenGL anyway, going through SDL_Surface doesn’t make much sense; you can just as well use stb_image.h directly (it outputs the pixel data as a byte buffer, in byte-wise RGB or RGBA, and is really easy to use).

Interestingly enough, the documentation in the source code seems to say that it returns a surface in RGB format.

Is it possibly just this one bmp file that’s got this issue, or are all bitmaps on your system in reversed order?

Any idea if your system is big endian or little endian?

Do you mind confirming that SDL is detecting the byte order that you would expect it to:

#if (SDL_BYTEORDER == SDL_BIG_ENDIAN)

SDL_Log("Detected Byte Order is Big Endian");

#endif

#if (SDL_BYTEORDER == SDL_LIL_ENDIAN)

SDL_Log("Detected Byte Order is Little Endian");

#endif
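If you want to double-check the compile-time macro, a runtime probe that does not depend on SDL at all might look like this. It is just a sketch; `host_is_little_endian` is a made-up name.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical helper: returns 1 on a little-endian host and 0 on a
   big-endian host, by looking at the first byte in memory of the
   32-bit value 1. */
int host_is_little_endian(void)
{
    const uint32_t probe = 1;
    uint8_t first_byte;
    memcpy(&first_byte, &probe, 1);
    return first_byte == 1;
}
```

On an ordinary x86 Linux PC this should agree with SDL_BYTEORDER == SDL_LIL_ENDIAN.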

How are you confirming that your image is returned in BGR format?

INFO: Detected Byte Order is Little Endian

It is a completely ordinary Linux PC.

Good call, my computer is also returning SDL_PIXELFORMAT_BGR24:

#include <SDL3/SDL.h>

int main()

{

SDL_Init(SDL_INIT_VIDEO);

SDL_Surface * surf = SDL_LoadBMP("a.bmp");

SDL_Log("%s", SDL_GetPixelFormatName(surf->format->format));

SDL_Quit();

}

If I remember correctly, this is because that is the default pixel format for the window’s screen surface. Images get blitted faster if they match the screen.

I will dig deeper into the source to try to find something that might change this default loading behavior.

void printFormat(SDL_Surface *sur){

SDL_Log("format: %u", sur->format->format);

SDL_Log("bit per pixel: %u bytes per Pixel: %u", sur->format->bits_per_pixel, sur->format->bytes_per_pixel);

SDL_Log("Rmask: %08X Gmask: %08X Bmask: %08X Amask: %08X ", sur->format->Rmask, sur->format->Gmask, sur->format->Bmask, sur->format->Amask);

SDL_Log("Rshift: %u Gshift: %u Bshift: %u Ashift: %u ", sur->format->Rshift, sur->format->Gshift, sur->format->Bshift, sur->format->Ashift);

SDL_Log("Rloss: %u Gloss: %u Bloss: %u Aloss: %u ", sur->format->Rloss, sur->format->Gloss, sur->format->Bloss, sur->format->Aloss);

}

I also inspected my surface.

INFO: format: 390076419

INFO: bit per pixel: 24 bytes per Pixel: 3

INFO: Rmask: 00FF0000 Gmask: 0000FF00 Bmask: 000000FF Amask: 00000000

INFO: Rshift: 16 Gshift: 8 Bshift: 0 Ashift: 0

INFO: Rloss: 0 Gloss: 0 Bloss: 0 Aloss: 8
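To read these masks as a byte order in memory: assuming the 24-bit pixel is treated as an integer whose first byte in memory is the least significant one (the little-endian case reported above), a mask of 00FF0000 means that channel sits in the third byte. A small sketch, with the made-up helper name `channel_byte_offset_le`:

```c
#include <stdint.h>

/* Hypothetical helper: for a channel mask that covers exactly one byte
   (e.g. 0x00FF0000), return which byte of the pixel holds that channel
   on a little-endian host, where 0 is the first byte in memory.
   Returns -1 for an empty or non-byte-aligned mask. */
int channel_byte_offset_le(uint32_t mask)
{
    for (int offset = 0; offset < 4; ++offset) {
        if (mask == (UINT32_C(0xFF) << (8 * offset))) {
            return offset;
        }
    }
    return -1;
}
```

For the masks logged above this gives Bmask at offset 0, Gmask at offset 1 and Rmask at offset 2, so the bytes in memory are B, G, R.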

Sorry, there just isn’t much in the LoadBMP_IO() function that the user can influence; I couldn’t find anything that would let us request a preferred default format.

So for right now the best option that I know of is to use SDL_ConvertSurfaceFormat to reformat the data.

#include <SDL3/SDL.h>

SDL_Surface * loadBMP_RGB(const char * filePath)

{

SDL_Surface * surf = SDL_LoadBMP(filePath);

SDL_Surface * dest = SDL_ConvertSurfaceFormat(surf, SDL_PIXELFORMAT_RGB24);

SDL_DestroySurface(surf);

return dest;

}

int main()

{

SDL_Init(SDL_INIT_VIDEO);

SDL_Surface * surf = loadBMP_RGB("a.bmp");

SDL_Log("%s", SDL_GetPixelFormatName(surf->format->format));

SDL_DestroySurface(surf);

SDL_Quit();

}

I think the various image loading functions just try to preserve the format that is used in the files (even if that is not a guarantee). If you need a different format just convert it as you said.

I don’t know where you see this but I think “RGB format” could mean any format that contains R, G and B components regardless of order.

24bit Bitmaps internally store the PixelData in BGR order (see BMP file format - Wikipedia).

So for SDL_LoadBMP the easiest and fastest way to create a SDL_Surface from a 24bit BMP is creating one in BGR format.

@Peter87: You are right, it was this comment about surface creation that threw me off

/* Create a compatible surface, note that the colors are RGB ordered */

There was no promise that it would return specifically a RGB formatted surface. The format of the surface is auto-generated from the color masks which are defined in the switch statement above the comment.

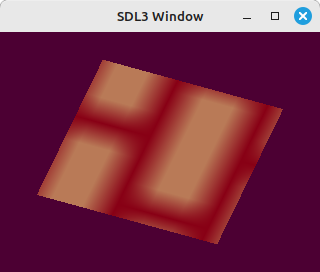

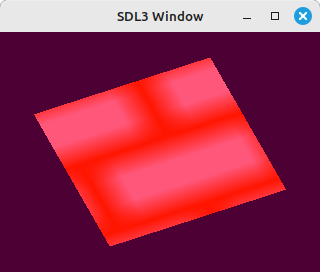

The conversion works if I use a 24-bit format as the target.

surfaceTexture = SDL_ConvertSurfaceFormat(surfaceBMP, SDL_PIXELFORMAT_RGB24); // 24bit

...

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB, surfaceTexture->w, surfaceTexture->h, 0, GL_RGB, GL_UNSIGNED_BYTE, surfaceTexture->pixels);

But if I try a 32-bit format, the colors are no longer correct. The pixel positions are still correct.

surfaceTexture = SDL_ConvertSurfaceFormat(surfaceBMP, SDL_PIXELFORMAT_RGBA8888); // 32bit

...

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, surfaceTexture->w, surfaceTexture->h, 0, GL_RGBA, GL_UNSIGNED_BYTE, surfaceTexture->pixels);

But if I use SDL_PIXELFORMAT_ABGR8888, then 32bit works too.

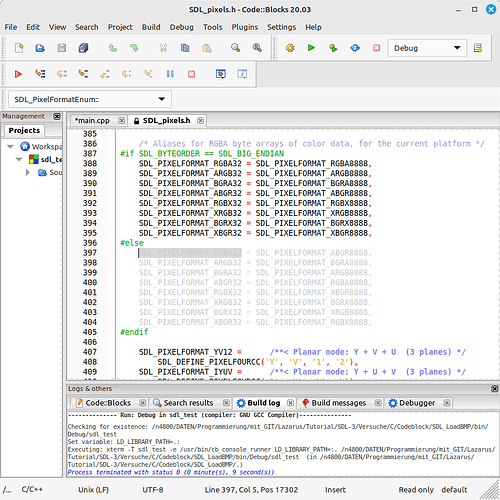

Now I stumbled upon something strange.

If I search for SDL_PIXELFORMAT_RGBA32 in code::blocks, it jumps to the gray area, see the attached screenshot.

Looks like a big/little endian problem to me.

Exactly. Most 32bit SDL_PIXELFORMAT types are based on uint32_t, and the “first” byte is the lowest one in the integer; where that is in memory depends on little vs big endian.

SDL_PIXELFORMAT_RGBA32 is byte-wise RGBA on both little and big-endian platforms, and implemented as an alias for the corresponding platform-specific uint32_t-based type.
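To make the alias idea concrete without pulling in SDL, here is a rough sketch in plain C. The function names are made up for illustration; the only thing taken from SDL is the naming convention that in an xxxx8888 format the first letter is the highest byte of the uint32_t.

```c
#include <stdint.h>
#include <string.h>

/* Packed "RGBA8888" layout: R in the highest 8 bits, A in the lowest. */
uint32_t pack_rgba8888(uint8_t r, uint8_t g, uint8_t b, uint8_t a)
{
    return ((uint32_t)r << 24) | ((uint32_t)g << 16) | ((uint32_t)b << 8) | a;
}

/* Packed "ABGR8888" layout: A in the highest 8 bits, R in the lowest. */
uint32_t pack_abgr8888(uint8_t r, uint8_t g, uint8_t b, uint8_t a)
{
    return ((uint32_t)a << 24) | ((uint32_t)b << 16) | ((uint32_t)g << 8) | r;
}

/* Hypothetical helper: pick the packed layout whose bytes read
   R, G, B, A in memory on this host, which is the role the byte-wise
   alias plays. */
uint32_t pack_bytewise_rgba(uint8_t r, uint8_t g, uint8_t b, uint8_t a)
{
    const uint32_t probe = 1;
    uint8_t first_byte;
    memcpy(&first_byte, &probe, 1);
    return (first_byte == 1) ? pack_abgr8888(r, g, b, a)   /* little endian */
                             : pack_rgba8888(r, g, b, a);  /* big endian */
}
```

On a little-endian machine the byte-wise RGBA value is therefore the same as the packed ABGR8888 one, which would explain why ABGR8888 gave correct colors with GL_RGBA and GL_UNSIGNED_BYTE.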

No idea why code::blocks greys out that block. It’s the correct line, but obviously the not-SDL_BIG_ENDIAN (=> little endian) case should be active and the big endian case greyed out.

Somehow this twist is completely illogical to me. If I need a 24bit texture, I have to use SDL_PIXELFORMAT_RGB24 (RGB) when converting and if I need 32bit, I have to use SDL_PIXELFORMAT_ABGR32 (BGR).

On the OpenGL ES side it is GL_RGB or GL_RGBA, both in RGB order.

So it will probably be best to avoid the SDL_PIXELFORMAT_xxxx8888 formats and use the SDL_PIXELFORMAT_xxxx32/24?

“If I need a 24bit texture, I have to use SDL_PIXELFORMAT_RGB24 (RGB) when converting and if I need 32bit, I have to use SDL_PIXELFORMAT_ABGR32 (BGR).”

Shouldn’t that be SDL_PIXELFORMAT_RGBA32?

RGB24 is byte-wise RGB, RGBA32 is byte-wise RGBA.

If you need formats that are specified byte-wise (instead of per uint), you should use the ones with 32/24 at the end.

Now I see the point of it all. …RGBA32 is needed for a byte array and …RGBA8888 for a DWORD array.

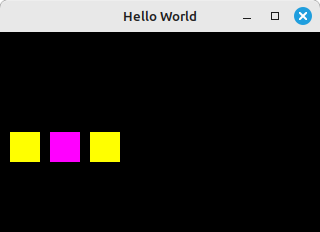

You can see it with printSurface and you can also see it clearly with SDL_BlitSurfaceScaled.

#include <SDL3/SDL.h>

void printSurface(SDL_Surface *sur) {

unsigned char *ch ;

ch = (unsigned char*) sur->pixels;

SDL_Log("Pixel: %02X %02X %02X %02X ", ch[0], ch[1], ch[2], ch[3]);

SDL_Log("format: %u", sur->format->format);

SDL_Log("bit per pixel: %u bytes per Pixel: %u", sur->format->bits_per_pixel, sur->format->bytes_per_pixel);

SDL_Log("Rmask: %08X Gmask: %08X Bmask: %08X Amask: %08X ", sur->format->Rmask, sur->format->Gmask, sur->format->Bmask, sur->format->Amask);

SDL_Log("Rshift: %u Gshift: %u Bshift: %u Ashift: %u ", sur->format->Rshift, sur->format->Gshift, sur->format->Bshift, sur->format->Ashift);

SDL_Log("Rloss: %u Gloss: %u Bloss: %u Aloss: %u\n\n", sur->format->Rloss, sur->format->Gloss, sur->format->Bloss, sur->format->Aloss);

}

int main(int argc, char *argv[])

{

uint8_t pixels_1[] = {0xFF, 0xFF, 0x00, 0xFF};

uint32_t pixels_2[] = {0xFFFF00FF};

SDL_Init(SDL_INIT_VIDEO);

SDL_Window *win = SDL_CreateWindow("Big/Little-Endian", 320, 200, SDL_WINDOW_RESIZABLE);

SDL_Surface *winSurface = SDL_GetWindowSurface(win);

// OK: byte array with a byte-wise format

SDL_Surface *Surf1 = SDL_CreateSurface(1, 1, SDL_PIXELFORMAT_RGBA32);

SDL_memcpy(Surf1->pixels, &pixels_1, sizeof(pixels_1));

printSurface(Surf1);

// wrong: uint32_t array with a byte-wise format (channels swapped on little endian)

SDL_Surface *Surf2 = SDL_CreateSurface(1, 1, SDL_PIXELFORMAT_RGBA32);

SDL_memcpy(Surf2->pixels, &pixels_2, sizeof(pixels_2));

printSurface(Surf2);

// OK: uint32_t array with a packed format

SDL_Surface *Surf3 = SDL_CreateSurface(1, 1, SDL_PIXELFORMAT_RGBA8888);

SDL_memcpy(Surf3->pixels, &pixels_2, sizeof(pixels_2));

printSurface(Surf3);

SDL_Rect r;

r = {10, 100, 30, 30};

SDL_BlitSurfaceScaled(Surf1, nullptr, winSurface, &r, SDL_SCALEMODE_NEAREST);

r = {50, 100, 30, 30};

SDL_BlitSurfaceScaled(Surf2, nullptr, winSurface, &r, SDL_SCALEMODE_NEAREST);

r = {90, 100, 30, 30};

SDL_BlitSurfaceScaled(Surf3, nullptr, winSurface, &r, SDL_SCALEMODE_NEAREST);

SDL_UpdateWindowSurface(win);

SDL_Delay(5000); // for Test

// The window surface is owned by the window and is freed with it, so it must not be passed to SDL_DestroySurface.

SDL_DestroyWindow(win);

SDL_DestroySurface(Surf1);

SDL_DestroySurface(Surf2);

SDL_DestroySurface(Surf3);

SDL_Quit();

return 0;

}
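The same experiment can be reproduced without a window. The sketch below uses made-up names (`Rgba`, `decode_bytewise_rgba`, `decode_packed_rgba8888`) and only models the two interpretations discussed in this thread: byte-wise (like …RGBA32) and packed in a uint32_t (like …RGBA8888).

```c
#include <stdint.h>
#include <string.h>

typedef struct { uint8_t r, g, b, a; } Rgba;

/* Byte-wise interpretation: the first byte in memory is red. */
Rgba decode_bytewise_rgba(const void *pixel)
{
    uint8_t bytes[4];
    memcpy(bytes, pixel, 4);
    Rgba c = { bytes[0], bytes[1], bytes[2], bytes[3] };
    return c;
}

/* Packed interpretation: red is the highest 8 bits of the uint32_t,
   wherever those end up in memory on this host. */
Rgba decode_packed_rgba8888(const void *pixel)
{
    uint32_t v;
    memcpy(&v, pixel, 4);
    Rgba c = { (uint8_t)(v >> 24), (uint8_t)(v >> 16),
               (uint8_t)(v >> 8),  (uint8_t)v };
    return c;
}
```

Decoding pixels_1 byte-wise and pixels_2 as packed both give R=FF, G=FF, B=00 (yellow), matching Surf1 and Surf3. Decoding pixels_2 byte-wise on a little-endian machine gives R=FF, G=00, B=FF (magenta), which is the swapped Surf2 case.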