Hi everyone! I'm new to this forum, so let me use this first post to greet the whole community. When I pass the SDL_RENDERER_ACCELERATED flag to SDL_CreateRenderer, I get strange graphics when the output is scaled via SDL_RenderSetLogicalSize; this does not happen with the SDL_RENDERER_SOFTWARE flag. Hardware-accelerated rendering is tempting because of the remarkable performance gain, but the visual result is unpleasant. Why does this happen?

Sorry for hosting the images on another domain, but I’m a new user and the forum does not let me embed more than one at the moment.

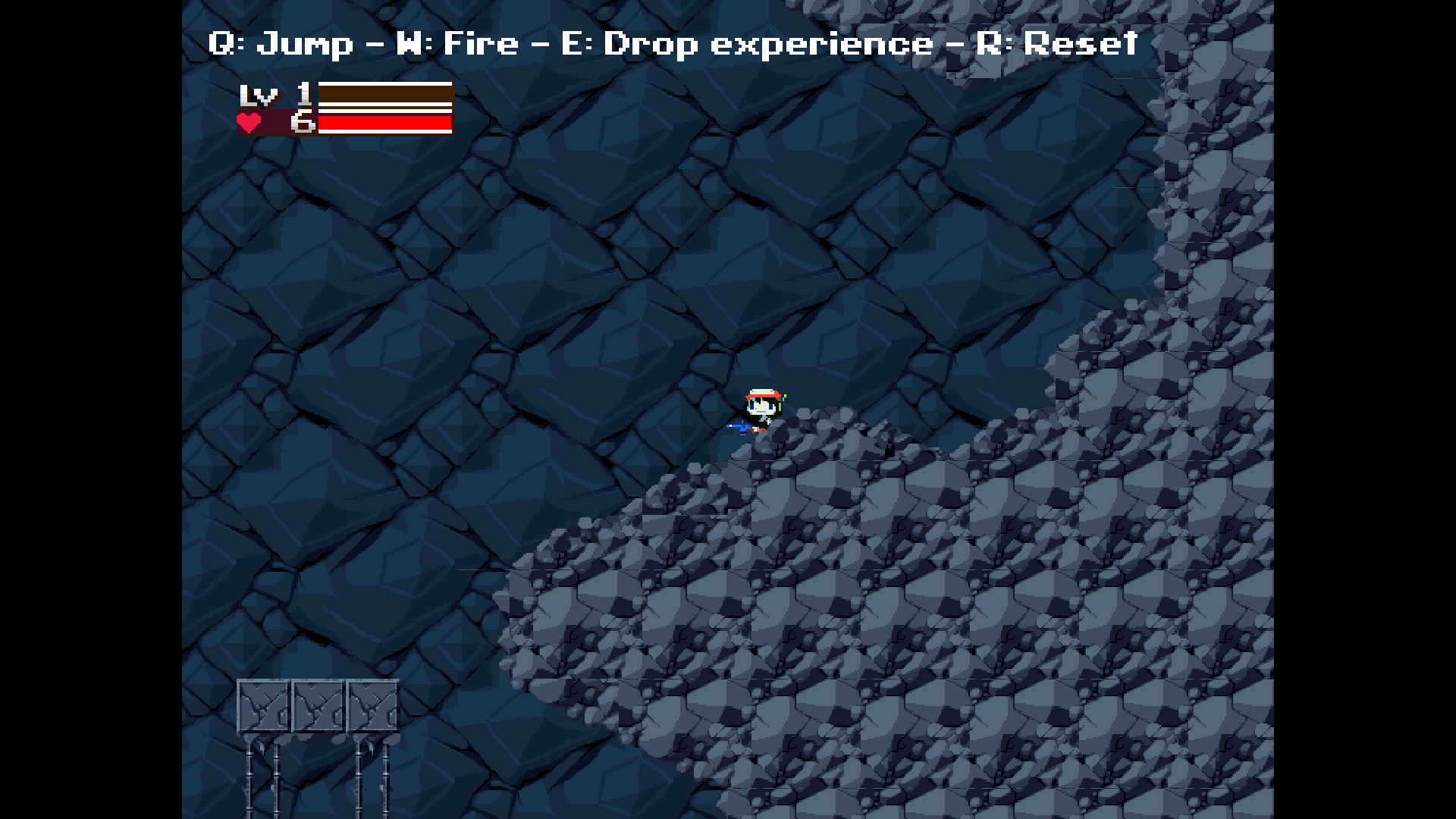

SDL_RENDER_ACCELERATED, look at the weird lines that form on each tile:

SDL_RENDERER_ACCELERATED with SDL_SetHint(SDL_HINT_RENDER_SCALE_QUALITY, "1"), where the problem is more noticeable:

https://ibb.co/b6PWZDP

SDL_RENDERER_SOFTWARE (how I actually want it to look):

https://ibb.co/x7BV4pY

This is my graphics class, which implements all the functions needed for drawing (graphics.cpp):

    #include "graphics.h"
    #include "game.h"

    #include <SDL2/SDL.h>

    #include <cstdio>   // fprintf
    #include <cstdlib>  // exit, EXIT_FAILURE
    #include <string>

    Graphics::Graphics() {
        window_ = SDL_CreateWindow("Cave Story Clone - SDL2",
                                   SDL_WINDOWPOS_CENTERED, SDL_WINDOWPOS_CENTERED,
                                   units::tileToPixel(Game::kScreenWidth),
                                   units::tileToPixel(Game::kScreenHeight),
                                   0);

        // TODO: fix weird graphics when SDL_RENDERER_ACCELERATED is used
        renderer_ = SDL_CreateRenderer(window_, -1,
                                       SDL_RENDERER_ACCELERATED | SDL_RENDERER_TARGETTEXTURE);

        // Render at a fixed logical resolution; SDL scales it to the output size.
        SDL_RenderSetLogicalSize(renderer_,
                                 units::tileToPixel(Game::kScreenWidth),
                                 units::tileToPixel(Game::kScreenHeight));

        SDL_ShowCursor(SDL_DISABLE);
    }

    Graphics::~Graphics() {
        // Free every cached sprite sheet before tearing down the renderer.
        for (SpriteMap::iterator iter = spr_sheets_.begin();
             iter != spr_sheets_.end();
             iter++) {
            SDL_DestroyTexture(iter->second);
        }
        SDL_DestroyRenderer(renderer_);
        SDL_DestroyWindow(window_);
    }

    SDL_Texture* Graphics::surfaceToTexture(SDL_Surface* surface) {
        return SDL_CreateTextureFromSurface(renderer_, surface);
    }

    Graphics::SurfaceID Graphics::loadImage(const std::string& file_name, bool black_to_alpha) {
        const std::string file_path = config::getGraphicsQuality() == config::ORIGINAL ?
            "assets/" + file_name + ".pbm" :
            "assets/" + file_name + ".bmp";

        // if we have not loaded in the spritesheet
        if (spr_sheets_.count(file_path) == 0) {
            // load it in now
            SDL_Surface* image = SDL_LoadBMP(file_path.c_str());
            if (!image) {
                fprintf(stderr, "Could not find image: %s\n", file_path.c_str());
                exit(EXIT_FAILURE);
            }
            if (black_to_alpha) {
                // Treat pure black as the transparent color key.
                const Uint32 black_color = SDL_MapRGB(image->format, 0, 0, 0);
                SDL_SetColorKey(image, SDL_TRUE, black_color);
            }
            spr_sheets_[file_path] = surfaceToTexture(image);
            SDL_FreeSurface(image);
        }
        return spr_sheets_[file_path];
    }

    void Graphics::render(
            SurfaceID source,
            SDL_Rect* source_rectangle,
            SDL_Rect* destination_rectangle) {
        // The destination always takes the size of the source region (no per-sprite scaling).
        if (source_rectangle) {
            destination_rectangle->w = source_rectangle->w;
            destination_rectangle->h = source_rectangle->h;
        } else {
            uint32_t format;
            int access, w, h;
            SDL_QueryTexture(source, &format, &access, &w, &h);
            destination_rectangle->w = w;
            destination_rectangle->h = h;
        }
        SDL_RenderCopy(renderer_, source, source_rectangle, destination_rectangle);
    }

    void Graphics::clear() {
        SDL_RenderClear(renderer_);
    }

    void Graphics::flip() {
        SDL_RenderPresent(renderer_);
    }

    void Graphics::setFullscreen() {
        // Toggle between windowed mode and desktop fullscreen.
        windowed = !windowed;
        if (windowed) {
            SDL_SetWindowFullscreen(window_, 0);
        } else {
            SDL_SetWindowFullscreen(window_, SDL_WINDOW_FULLSCREEN_DESKTOP);
        }
    }

And this is my sprite class (sprite.cpp):

    #include "sprite.h"
    #include "graphics.h"

    Sprite::Sprite(
            Graphics& graphics,
            const std::string& file_name,
            units::Pixel source_x, units::Pixel source_y,
            units::Pixel width, units::Pixel height) {
        const bool black_to_alpha = true;
        spr_sheet_ = graphics.loadImage(file_name, black_to_alpha);
        source_rect_.x = source_x;
        source_rect_.y = source_y;
        source_rect_.w = width;
        source_rect_.h = height;
    }

    void Sprite::draw(Graphics& graphics, units::Game x, units::Game y, SDL_Rect* camera) {
        SDL_Rect destination_rectangle;
        if (!camera) {
            destination_rectangle.x = units::gameToPixel(x);
            destination_rectangle.y = units::gameToPixel(y);
        } else {
            destination_rectangle.x = units::gameToPixel(x) - camera->x;
            destination_rectangle.y = units::gameToPixel(y) - camera->y;
        }
        graphics.render(spr_sheet_, &source_rect_, &destination_rectangle);
    }

Note that each object on the screen is an individual sprite, and the lines appear on every one of them.

The program renders at a resolution of 640x480, which is scaled up to my monitor's resolution of 1920x1080. I use Arch Linux with X11, and I'm not calling any OpenGL functions to draw; I only use the SDL2 rendering API.
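For reference, the scale factor implied by these two resolutions can be worked out with a small sketch. It assumes the logical size is fitted into the output with the aspect ratio preserved, so the smaller of the two axis ratios is used. The helper names `letterboxScale` and `landsOnWholePixel` are hypothetical and are not part of SDL or of the code above.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical helper (not an SDL function): the aspect-preserving scale
// factor for fitting a logical resolution into an output resolution.
// Assumption: the smaller of the two axis ratios is used (letterboxing).
double letterboxScale(int logical_w, int logical_h, int output_w, int output_h) {
    assert(logical_w > 0 && logical_h > 0);
    const double scale_x = static_cast<double>(output_w) / logical_w;
    const double scale_y = static_cast<double>(output_h) / logical_h;
    return std::min(scale_x, scale_y);
}

// Hypothetical helper: true when a logical coordinate, multiplied by the
// scale factor, maps to a whole output pixel rather than a fractional one.
bool landsOnWholePixel(int logical_coord, double scale) {
    const double scaled = logical_coord * scale;
    return std::floor(scaled) == scaled;
}
```

With the numbers from this post, `letterboxScale(640, 480, 1920, 1080)` evaluates to 2.25 (the vertical ratio 1080/480, since the horizontal ratio is 3). At that factor only every fourth logical pixel column or row maps to a whole output pixel, so `landsOnWholePixel(4, 2.25)` is true while `landsOnWholePixel(1, 2.25)` is false. Whether this fractional scale is what produces the lines is not established here; the sketch only makes the arithmetic explicit.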

Sorry for my poor English; I'm a native Spanish speaker. Thanks for everything, even just for taking the time to read this, and have a nice day, everyone.

Thank you all!